As society develops and hearing-impaired people receive more attention, the development of hearing aids has gradually attracted interest. Hearing impairment has many different causes, so the degree of hearing loss varies widely and each patient has different requirements for hearing aid compensation. Modern hearing aid technology has now entered the era of fully digital hearing aids, and digital signal processing algorithms that effectively improve the performance of digital hearing aids are receiving increasing attention. This article proposes a digital hearing aid design based on the TMS320VC5416 to meet the hearing needs of hearing-impaired patients.

1 System structure and working principle

1.1 System composition

Based on the technical requirements of hearing aids, TI's C54x-series DSP TMS320VC5416 (hereinafter C5416) and the audio codec TLV320AIC23 (hereinafter AIC23) are selected.

The AIC23 is a high-performance stereo audio codec from TI. It integrates the A/D and D/A converters on chip, uses advanced Σ-Δ oversampling, and includes a built-in headphone output amplifier. Its operating voltages are compatible with the core and I/O voltages of the C5416, so it connects directly to the C54x serial port with low power consumption. This makes the AIC23 well suited as the analog audio front end of a digital hearing aid.


The system structure is shown in Figure 1. It consists mainly of the DSP module, audio processing module, JTAG interface, storage module and power module. The analog voice signal enters the AIC23 through MIC IN or LINE IN. After analog-to-digital conversion, the samples are passed to the C5416 through its McBSP serial port. The C5416 applies the required processing and compensation algorithms to produce the voice signal the hearing-impaired patient needs; the AIC23 then performs digital-to-analog conversion and outputs the sound through speakers or headphones.

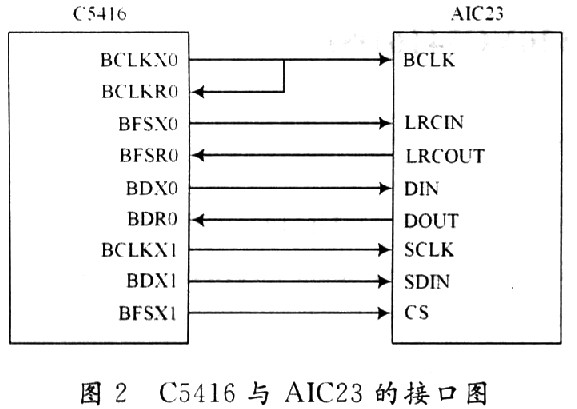

1.2 Interface design of C5416 and AIC23

Figure 2 shows the interface between the C5416 and the AIC23. Because the AIC23 exchanges its sampled data serially, its serial protocol must be matched to that of the DSP, and the McBSP is well suited to voice signal transmission. Pin 22 of the AIC23 is tied high so that it receives control data from the DSP in SPI format. The digital control interface (SCLK, SDIN, CS) is connected to McBSP1. Each control word is 16 bits long and is transmitted MSB first. The digital audio ports LRCOUT, LRCIN, DOUT, DIN and BCLK are connected to McBSP0. In operation, the DSP is the master and the AIC23 is the slave; that is, the BCLK clock is generated by the DSP.
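The 16-bit control word described above can be illustrated with a short C sketch. It assumes the standard AIC23 word layout (7-bit register address in bits 15 to 9, 9-bit register data in bits 8 to 0) and the usual AIC23 register addresses; the function names are hypothetical, and the actual write through McBSP1 is board-specific and not shown.

```c
#include <stdint.h>

/* AIC23 control-register addresses (7-bit). */
enum aic23_reg {
    AIC23_LEFT_LINE_IN   = 0x00,
    AIC23_RIGHT_LINE_IN  = 0x01,
    AIC23_LEFT_HP_VOL    = 0x02,
    AIC23_RIGHT_HP_VOL   = 0x03,
    AIC23_ANALOG_PATH    = 0x04,
    AIC23_DIGITAL_PATH   = 0x05,
    AIC23_POWER_DOWN     = 0x06,
    AIC23_DIGITAL_IF_FMT = 0x07,
    AIC23_SAMPLE_RATE    = 0x08,
    AIC23_DIGITAL_IF_ACT = 0x09,
    AIC23_RESET          = 0x0F
};

/* Pack a 16-bit control word: bits 15..9 = register address, bits 8..0 = data. */
uint16_t aic23_control_word(uint8_t addr, uint16_t data)
{
    return (uint16_t)(((addr & 0x7Fu) << 9) | (data & 0x1FFu));
}

/* Serialize the word MSB first, as it would be clocked out on SDIN:
   bits[0] receives bit 15 and bits[15] receives bit 0. */
void aic23_serialize_msb_first(uint16_t word, uint8_t bits[16])
{
    for (int i = 0; i < 16; ++i)
        bits[i] = (uint8_t)((word >> (15 - i)) & 1u);
}
```

For example, the reset command (register 0x0F, data 0) packs to 0x1E00, which is then shifted out starting from the high bit.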

BCLKX0 and BCLKR0 are tied together and connected to the AIC23 BCLK pin, so the same serial clock drives both transmission and reception. The sync signals LRCIN and LRCOUT initiate serial data transfers and receive the DSP's frame sync signals.

BFSX0 and BFSR0 are connected to LRCIN and LRCOUT, and BDX0 and BDR0 to DIN and DOUT of the AIC23, respectively, to implement digital communication between the DSP and the AIC23.

2 System implementation

2.1 Basic characteristics of speech

Sound is a wave, and the human ear can hear vibration frequencies from 20 Hz to 20 kHz. Speech is a kind of sound: sound produced by the human vocal organs that carries grammar and meaning. Speech contains frequency components up to about 15 kHz.

By type of excitation, speech is divided into voiced, unvoiced and plosive sounds. The characteristics of a human voice are largely determined by factors such as the pitch period and the formants. When a voiced sound is produced, airflow through the glottis makes the vocal cords vibrate, producing a quasi-periodic train of excitation pulses. The period of this pulse train is called the "pitch period", and its reciprocal is the "pitch frequency".
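To show how the pitch period and pitch frequency relate in practice, the C sketch below estimates the pitch of a voiced frame by searching for the autocorrelation peak. Autocorrelation is one common pitch estimator chosen here for illustration; the article does not say which method, if any, it uses, and the 40 to 400 Hz search range in the usage note is an assumption.

```c
#include <stddef.h>

/* Unnormalized autocorrelation r(k) = sum_{i=0}^{n-k-1} x[i] x[i+k]. */
static double autocorr(const double *x, size_t n, size_t lag)
{
    double acc = 0.0;
    for (size_t i = 0; i + lag < n; ++i)
        acc += x[i] * x[i + lag];
    return acc;
}

/* Estimate the pitch frequency (Hz) of a voiced frame by picking the lag
   (pitch period in samples) with the largest autocorrelation inside
   [fs/fmax, fs/fmin]. Returns 0 if the frame is too short for that range. */
double estimate_pitch_hz(const double *x, size_t n, double fs,
                         double fmin, double fmax)
{
    size_t min_lag = (size_t)(fs / fmax);
    size_t max_lag = (size_t)(fs / fmin);
    if (min_lag < 1)
        min_lag = 1;
    if (max_lag >= n)
        return 0.0;

    size_t best_lag = min_lag;
    double best = autocorr(x, n, min_lag);
    for (size_t k = min_lag + 1; k <= max_lag; ++k) {
        double r = autocorr(x, n, k);
        if (r > best) {
            best = r;
            best_lag = k;
        }
    }
    return fs / (double)best_lag;   /* pitch frequency = 1 / pitch period */
}
```

For an 8 kHz frame of 800 samples containing a pulse train with a period of 80 samples, `estimate_pitch_hz(x, 800, 8000.0, 40.0, 400.0)` returns 100 Hz, the reciprocal of the 10 ms pitch period.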

Both the human vocal tract and the nasal tract can be regarded as acoustic tubes with non-uniform cross-sections. The resonant frequencies of such a tube are called formants, and changing the shape of the tract produces different sounds. The formants form a sequence of increasing frequencies, F1, F2, F3 and so on, called the first formant, the second formant, and so on. To improve the quality of received speech, as many formants as possible should be preserved. In practice the first three formants are the most important, and their exact values vary from person to person.
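A worked example helps place F1 to F3 on the frequency axis. Under the textbook simplification of a uniform tube of length L, closed at the glottis and open at the lips (not the non-uniform tube described above), the resonances fall at odd multiples of the quarter-wavelength frequency. The speed of sound c ≈ 350 m/s and tract length L ≈ 17.5 cm are assumed typical figures, not measurements from this design.

```latex
F_n = \frac{(2n-1)\,c}{4L}, \qquad n = 1, 2, 3, \ldots

F_1 = \frac{350}{4 \times 0.175} = 500~\text{Hz}, \qquad
F_2 = 3F_1 = 1500~\text{Hz}, \qquad
F_3 = 5F_1 = 2500~\text{Hz}
```

Real vowels shift these values as the tract shape changes, which is exactly what distinguishes one vowel from another.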

2.2 Speech enhancement

In real application environments, speech is corrupted by environmental noise to varying degrees. Speech enhancement processes noisy speech to reduce the effect of noise and improve the listening experience.

Interference affecting real speech falls into the following categories:

(1) Periodic noise, such as electrical interference or interference caused by engine rotation. It appears as discrete narrow peaks in the frequency domain; 50 Hz or 60 Hz mains hum in particular causes periodic noise.

(2) Impulse noise, such as that caused by electric sparks or discharges. It appears in the time domain as sudden bursts of narrow pulses. This noise can be removed in the time domain by setting a threshold based on the average amplitude of the noisy speech signal and treating samples that exceed it as noise.

(3) Wideband noise, usually Gaussian or white noise, whose bandwidth covers almost the entire speech band. It has many sources, including wind, breathing noise and general random noise sources.
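The threshold method for impulse noise in item (2) can be sketched in C as follows. The multiplier k applied to the mean absolute amplitude and the rule of replacing flagged samples with the average of their neighbours are assumptions for illustration; the article specifies only that the threshold is derived from the mean amplitude.

```c
#include <math.h>
#include <stddef.h>

/* Threshold-based impulse-noise removal on one frame (in place).
   Threshold = k * mean(|x|), computed once before any sample is changed.
   Samples above the threshold are treated as impulses and replaced by the
   average of their neighbours (hypothetical repair rule).
   Returns the number of samples replaced. */
size_t remove_impulses(double *x, size_t n, double k)
{
    if (n < 2)
        return 0;

    double mean_abs = 0.0;
    for (size_t i = 0; i < n; ++i)
        mean_abs += fabs(x[i]);
    mean_abs /= (double)n;
    const double thr = k * mean_abs;

    size_t replaced = 0;
    for (size_t i = 0; i < n; ++i) {
        if (fabs(x[i]) <= thr)
            continue;
        if (i == 0)
            x[i] = x[1];
        else if (i == n - 1)
            x[i] = x[n - 2];
        else
            x[i] = 0.5 * (x[i - 1] + x[i + 1]);
        ++replaced;
    }
    return replaced;
}
```

A larger k flags fewer samples. Runs of consecutive impulses are not handled specially in this sketch.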

2.3 Algorithm analysis

Noise greatly reduces patients' speech recognition rate, so denoising and compensation are important parts of a hearing aid. The human ear responds to sounds from 25 to 22 000 Hz, but most of the useful information in speech lies between 200 and 3 500 Hz. According to the perceptual characteristics of the human ear and experimental measurements, the second formant, which is important for speech perception and recognition, lies mostly above 1 kHz.

2.3.1 Periodic noise cancellation

Periodic noise typically consists of several discrete spectral peaks originating from periodic machinery such as engines. Electrical interference, especially 50 to 60 Hz hum, also causes periodic noise. Because this hum lies below the 200 to 3 500 Hz band that carries most speech information, a bandpass filter passing that band can effectively remove it together with high-frequency sound above 3 500 Hz.

IIR digital filters can be designed from mature analog filter prototypes such as Butterworth, Chebyshev and elliptic filters. The linear difference equation of an IIR digital filter is:

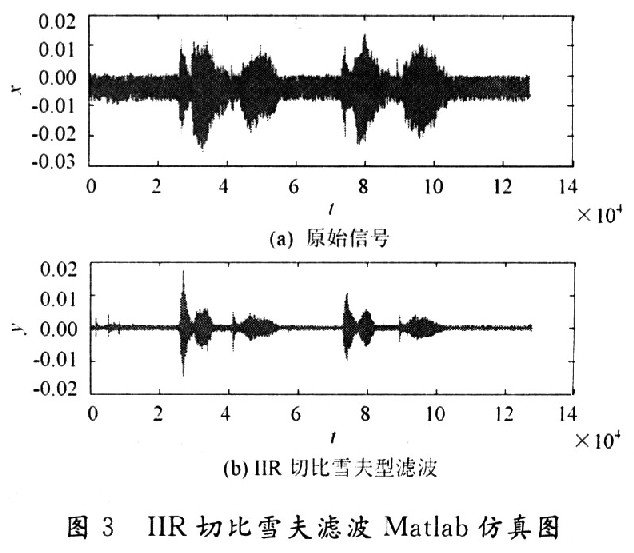

Figure 3 shows the real-time filtering of dynamic input data by this filter, visualized in MATLAB.

2.3.2 Wideband noise removal based on short-time spectral estimation

The short-time spectrum of a speech signal is strongly correlated, whereas that of noise is only weakly correlated, so the original speech can be estimated from the noisy speech by short-time spectral estimation. Moreover, because the human ear is insensitive to phase, the estimation can be limited to the magnitude of the short-time spectrum.

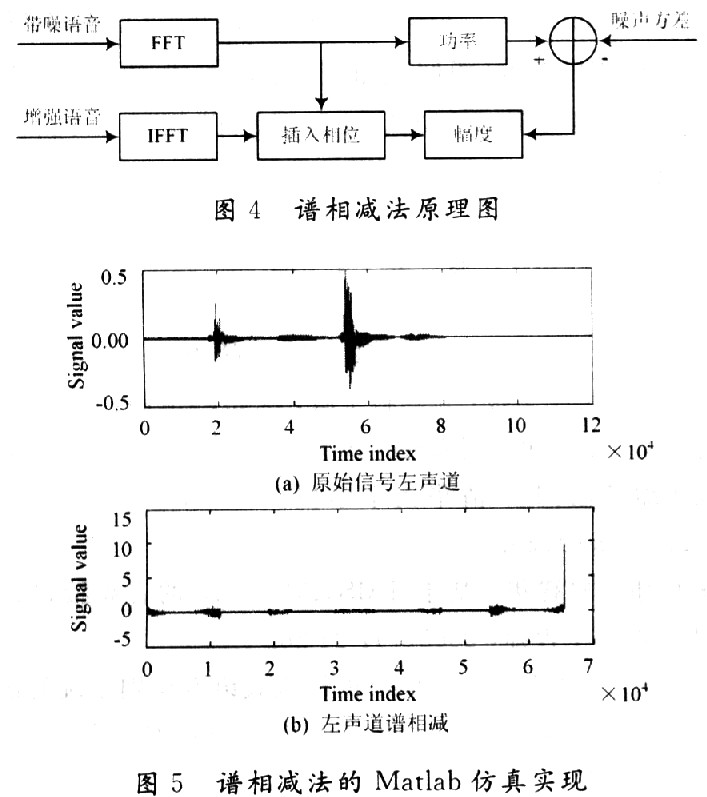

2.3.3 Spectral subtraction

Spectral subtraction is effective in single-microphone recording systems that have no reference noise source. Because noise is locally stationary, the noise power spectrum before speech begins can be assumed to equal that during speech, so the "quiet frames" before and after speech are used to estimate the noise.

The block diagram and simulation results of spectral subtraction are shown in Fig. 4 and Fig. 5. The speech signal is first divided into windowed frames and then denoised using the known noise power spectrum.

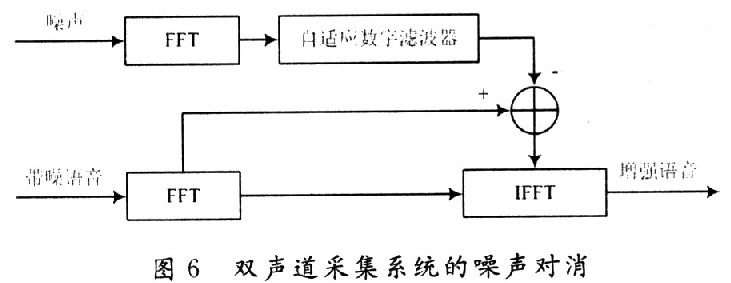

2.4 Noise cancellation

Noise cancellation is the most basic subtraction algorithm: the noise is subtracted directly from the noisy speech. Because broadband noise and speech overlap completely in both the time and frequency domains, such noise is difficult to remove. Nonlinear processing is therefore required, with an adaptive filter that is adjusted continuously.

In Figure 6, one channel picks up noisy speech and the other picks up noise. The noisy speech sequence S(n) and the noise sequence d(n) are Fourier transformed to obtain the spectral components Sk(ω) and Dk(ω); the noise component Dk(ω) is filtered and subtracted from the noisy speech spectrum. The phase of the noisy speech is then applied to the result, and an inverse Fourier transform gives the time-domain signal. Against a strong noise background, this method achieves good noise cancellation.

In practice, the two pickup channels must be isolated so that speech does not leak into the noise channel. An adaptive filter is well suited to making the collected noise closer to the noise actually present in the noisy speech.

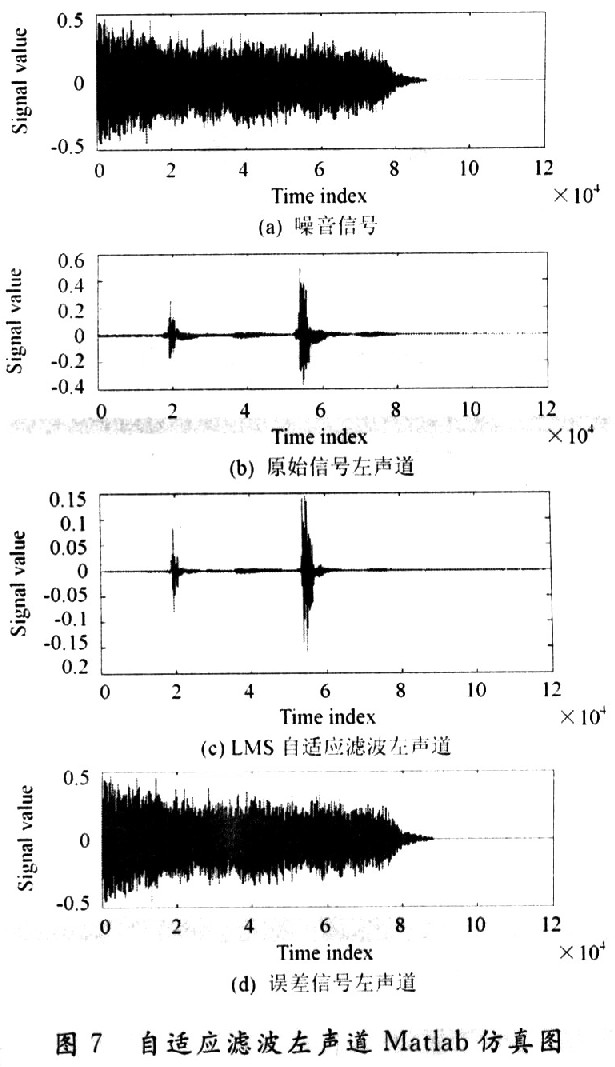

Fig. 7 shows an example of enhanced left-channel speech obtained by noise cancellation.
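The adaptive filter in the two-channel scheme can be illustrated with the least-mean-squares (LMS) algorithm. Note that this sketch swaps in a time-domain LMS canceller for clarity, whereas the article describes subtraction in the Fourier domain; the tap count `LMS_TAPS` and step size `mu` are assumptions to be tuned, and the function name is hypothetical.

```c
#include <stddef.h>

#define LMS_TAPS 4

/* Time-domain LMS adaptive noise canceller (two-microphone setup).
   primary[i]   : speech plus noise that reached the speech microphone
   reference[i] : noise picked up by the second microphone
   out[i]       : error e = primary - w^T x, i.e. the enhanced speech
   w            : LMS_TAPS filter weights, adapted in place */
void lms_cancel(const double *primary, const double *reference, double *out,
                size_t n, double mu, double w[LMS_TAPS])
{
    double x[LMS_TAPS] = {0};               /* reference delay line */
    for (size_t i = 0; i < n; ++i) {
        for (int k = LMS_TAPS - 1; k > 0; --k)
            x[k] = x[k - 1];
        x[0] = reference[i];

        double y = 0.0;                     /* noise estimate */
        for (int k = 0; k < LMS_TAPS; ++k)
            y += w[k] * x[k];

        double e = primary[i] - y;          /* error = enhanced speech */
        out[i] = e;
        for (int k = 0; k < LMS_TAPS; ++k)  /* LMS weight update */
            w[k] += mu * e * x[k];
    }
}
```

The filter learns the acoustic path from the noise microphone to the speech microphone, so its output approximates the noise component of the noisy speech and the error approximates the clean speech. A larger `mu` adapts faster but leaves more residual noise.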

2.4.1 Multi-channel compression algorithm

With hearing loss, the hearing threshold is generally raised, which narrows the auditory dynamic range. How much the dynamic range shrinks depends on frequency, and the loss is usually greater at high frequencies. The hearing compensation algorithm is one of the core algorithms of digital hearing aid signal processing. Its purpose is to compress and amplify sound so that the dynamic range of normal hearing is mapped into the patient's residual hearing range, keeping listening comfortable while improving the clarity and intelligibility of sound as much as possible.

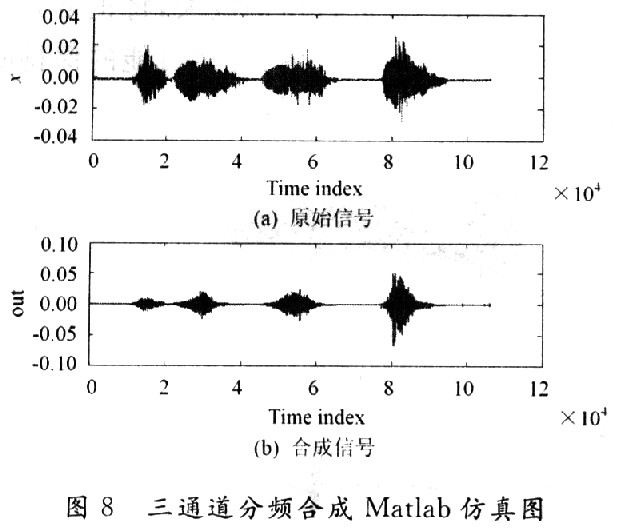

The signal is split into frequency bands, processed, and then recombined: the sound signal is divided into several independent frequency regions called channels. The algorithm processes the signals in the time domain. In each channel, different frequency bands are amplified by different amounts according to the patient's hearing impairment, different compression is applied to different frequency components, and the recombined sound is finally delivered to the patient's ear canal. In this system, the mid-frequency signal is moderately amplified, which gives good perceived results. Figure 8 shows the three-channel band splitting and recombination.

2.5 System implementation

The target board is connected to the PC through the USB interface, and the target project is debugged online with CCS (Code Composer Studio).

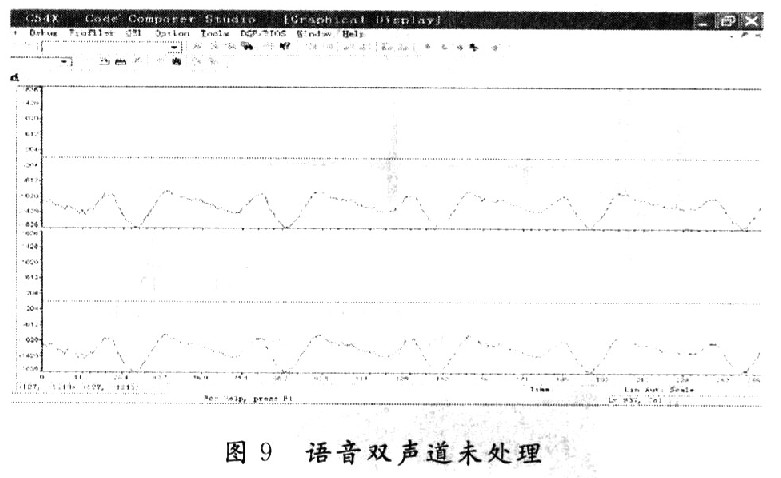

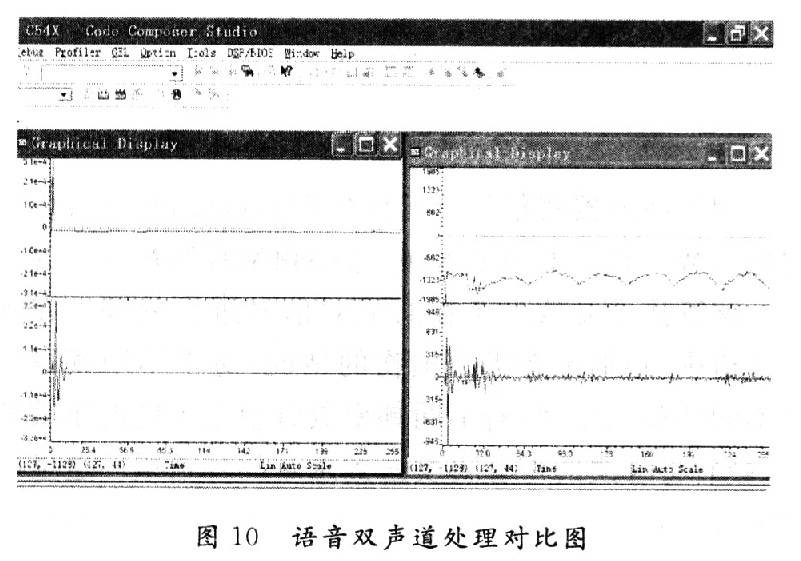

The main tasks of the target project are initializing the C5416, managing the on-board resources, and running the audio processing algorithms. To sample and output the audio signals correctly, each McBSP channel of the C5416 must be configured through its 27 associated registers to meet the timing requirements (bit synchronization, frame synchronization, clock signals, etc.) of the C5416 and the other chips in the circuit. Figure 9 shows playback of the original speech signal in the system, and Figure 10 compares the original and processed speech, captured with CCS connected to the DSP hardware.

3 Conclusion

The hearing aid designed in this work is miniaturized, highly integrated and convenient to use, and it can be modified according to the specific needs of individual patients. As society develops, not only hearing-impaired people but, in some specific situations, people with normal hearing also need hearing aids. Demands on hearing aids will keep evolving, and research into the open problems will keep pace. Hearing aids will serve people better, achieving harmony between humans and nature and promoting the harmonious development of society.
