Editor's note: The technology everyone is watching most closely is artificial intelligence, yet Apple, the world's most valuable company, appears indifferent to it and is widely considered to be seriously behind in the field. Apart from the voice assistant Siri, it seems to have little to show. But the real situation may be completely different from what outsiders assume. Backchannel editor-in-chief Steven Levy recently visited Apple and found that the company adopted the now-fashionable deep learning ahead of much of the industry and uses it throughout its products, not just in Siri. After reading this article, you will quickly understand which Apple products machine learning has quietly found its way into, how Apple managed to develop new technologies in secret for years, what challenges machine learning poses to its culture and principles, and how it has "worked against" the mainstream of the industry...

This article was compiled from Backchannel and translated jointly by Lei Feng Net authors Zhang Chi, Jia Xin and Jasper.

One

On July 30, 2014, Siri had a brain transplant.

Three years earlier, Apple had become the first mainstream company to integrate a smart assistant into its operating system. Siri was Apple's adaptation of an independent app that it had acquired, along with the app's development team, in 2010. Early reviews of Siri were glowing, but over the following months and years, users grew increasingly impatient with its shortcomings. It too often misunderstood commands, and tweaks did not fix the problem.

So on the date mentioned above, Apple moved Siri's speech recognition to a neural-network-based system. The change rolled out first to U.S. users and reached the rest of the world on August 15. Some earlier techniques, including hidden Markov models, remained in use, but the system now relied on machine learning techniques such as DNNs (deep neural networks), convolutional neural networks, long short-term memory units, gated recurrent units, and n-grams. After the upgrade, Siri looked the same to users, but under the hood it had been strengthened by deep learning.

Like other behind-the-scenes improvements, Apple did not announce Siri's progress, because it did not want to tip off competitors. If users noticed anything, it was simply that Siri made fewer mistakes. Apple says the gain in accuracy was startling.

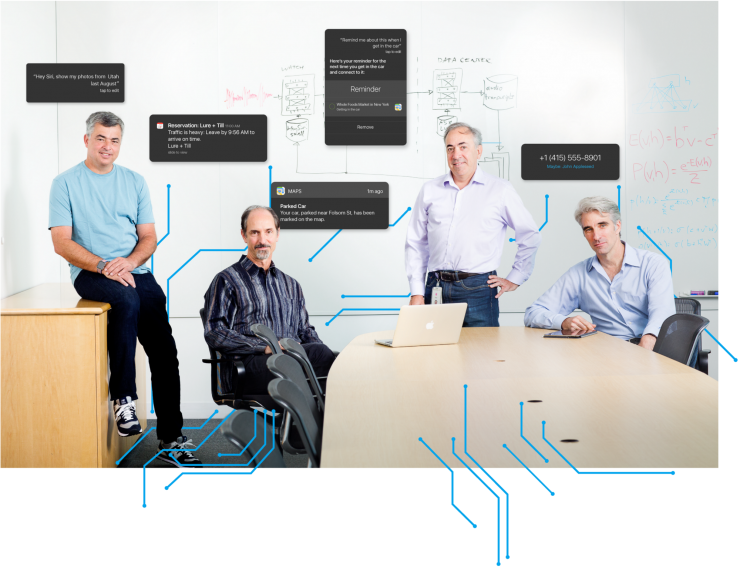

Eddy Cue

Eddy Cue, Apple's senior vice president of Internet Software and Services, said: "The improvement was so dramatic that we ran the tests again to make sure nobody had misplaced a decimal point."

The story of Siri's transformation may raise eyebrows in the AI field, not because neural networks improved the system, but because Apple did it so skillfully and so quietly. Until recently, although Apple had stepped up its AI hiring and made some high-profile acquisitions, outsiders still considered it behind in the fierce AI competition. Because Apple is so tight-lipped, even AI insiders do not know what it is doing in machine learning. Jerry Kaplan, who teaches the history of artificial intelligence at Stanford, said: "Apple is not part of the community. It's like the NSA (National Security Agency) of AI." The general belief was that if Apple's efforts were as serious as Google's and Facebook's, the outside world would know about them.

Oren Etzioni of the Allen Institute for Artificial Intelligence said: "Google, Facebook and Microsoft have the top machine learning talent. Apple does hire some people, but which of the top five machine learning people work for Apple? Apple has speech recognition technology, but where else is machine learning helping it?"

Two

However, earlier this month, Apple privately demonstrated how machine learning is used in its products. The demonstration was not for Oren Etzioni but for me. I spent most of that day at Apple's headquarters in Cupertino, where Apple executives walked me through the close connection between Apple's products and machine learning. (The executives included Eddy Cue, senior vice president of worldwide marketing Phil Schiller, and senior vice president of software engineering Craig Federighi.) Experts responsible for developing Siri were also present. Once we were all seated, they handed me a two-page list of machine learning applications, some already in products and services people use today and some still under discussion.

If you are an iPhone user, you have probably already benefited from machine learning. Contrary to what you might expect, it is not limited to Siri. It is at work when your phone identifies an unknown caller, when it lists the apps you are most likely to use after you unlock it, when it reminds you of an appointment you never put in your calendar, and when it automatically shows the location of a hotel you have reserved. All of these got better as Apple fully embraced machine learning and neural networks.

Yes, this is the legendary "Apple Brain" that has been built into your iPhone.

Neural network facial recognition

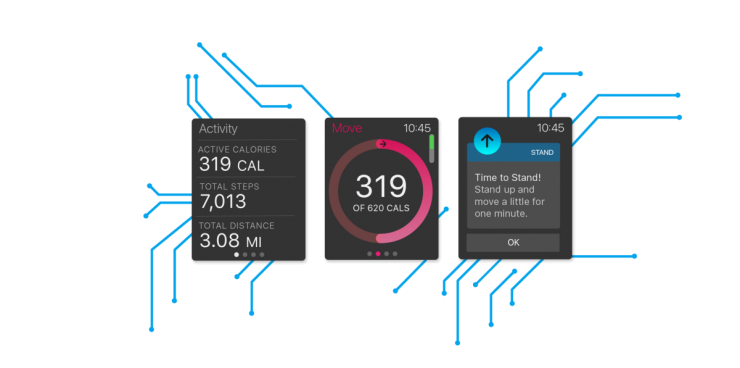

"Machine learning," an expert said, "is everywhere in Apple's products and services." The Apple Store uses deep learning to identify fraudulent behaviors, and feedback received by the public beta operating system is filtered using artificial intelligence to find useful feedback reports. There is also the Apple News app that uses machine learning to pick out news sources that may be of interest to you. Apple Watch also uses machine learning to detect whether the user is exercising or just hanging out. There is the well-known camera face recognition, iPhone already equipped with this technology. In the case of weak Wi-Fi signals, iOS will also recommend that you use a cellular network for battery power considerations. It can even tell whether the video is good or bad, and quickly click on a button to quickly clip a group of related videos together. Of course, these Apple's rivals do a good job, but executives stressed that Apple is the only company that balances user privacy and user experience. Of course, to achieve this standard on iOS devices, only Apple can do it.

For Apple, AI is nothing new. Back in the 1990s, when Apple launched the Newton tablet, its stylus relied on a degree of AI to recognize the user's handwriting. That research still shines in Apple's products today, in the Chinese character recognition on Apple Watch, which can accurately recognize even very scribbled strokes. (This work has been carried forward by the same machine learning team for decades.) Of course, early machine learning was extremely primitive, and deep learning was still immature at the time. Now that AI and machine learning have become buzzwords, Apple has been criticized for lagging behind. In recent weeks, Tim Cook finally spoke up, saying that Apple is not lacking in AI, it simply publicizes it less (see Lei Feng Net's earlier report). Now the executives have changed course and decided to make Apple's AI achievements public.

Machine Learning Health App for Apple Watch

"Apple has grown rapidly over the past five years," Phil Schiller said. "Our products are improving very quickly as well. The A-series chips deliver a notable performance jump every year, which gives us far more computing headroom. More and more machine learning technology is going into our end products. Machine learning has a lot to offer, and we are able to use it well."

Even though Apple embraces machine learning as enthusiastically as any Silicon Valley company, it uses the technology with restraint. Cupertino does not believe machine learning is a panacea. AI may be the future of interaction, but touch screens, tablets and object-oriented programming each played a similar role in their time. In Apple's view, machine learning is not, as other companies claim, the ultimate answer to human-computer interaction. "AI is not fundamentally different from the earlier technologies that changed how people interact with computers," said Eddy Cue. Apple is also uninterested in the clichéd debate over whether machines will replace humans. As expected, Apple did not confirm that it is building a car, nor did it comment on rumors that it is producing its own TV shows, but Apple's engineers made it clear that they will not create anything like Skynet.

"We use these technologies to do things we couldn't do before, and to do the old things better," Schiller said. "We make sure every technology is applied to our products in the most Apple way."

Later, they expanded on this view, for example on how far AI is reshaping Apple's ecosystem, and on how Apple compensates for not having a search engine (search engines generate data that helps train neural networks quickly). The executives again emphasized Apple's commitment to user privacy, even though it limits the use of user data and can hamper the effectiveness of machine learning. They insisted that these obstacles are not insurmountable.

How big is this "brain"? How much user data is cached on the iPhone for machine learning to draw on? The engineers' answer surprised me: "About 200 MB on average, depending on how much personal information there is." (To save storage space, the cache is cleaned up periodically.) The data includes app usage habits, interactions with other people, neural network processing, and a "natural language model." There are also neural networks for object recognition, face recognition and scene recognition.

As far as Apple is concerned, this data is your private information, and it is not uploaded to the web or the cloud.

Three

Although Apple did not fully explain its AI efforts, I did learn how it distributes machine learning expertise within the company. Its machine learning know-how is shared across the company, and product teams are encouraged to use it to solve problems and build distinctive, personalized features. "At Apple, we don't have a single organization focused solely on machine learning," Craig Federighi said. "We try to keep it close to the teams and apply it where it creates a great user experience."

So how many people at Apple work on machine learning? "A lot," Federighi said after some prodding. (If you expected him to give me a number, you don't know Apple yet.) Interestingly, many of the people doing machine learning at Apple had no formal training in the field before they joined. "We hire people who are very strong in fundamental areas such as mathematics, statistics, programming languages and cryptography," Federighi said. "It turns out these core talents translate extremely well into machine learning. We certainly hire plenty of machine learning specialists, but we also look for people with the right core aptitude and talent."

Craig Federighi (left) and Alex Acero

Although Federighi did not say so, this approach seems inevitable: Apple likes to keep things confidential, while competitors encourage their computer scientists to share research with the world, which puts Apple at a disadvantage. "Our practices tend to reinforce a natural selection: it comes down to two different kinds of people. Some want to create great products through teamwork, while others are primarily motivated by publishing," Federighi said. If scientists achieve a major breakthrough while improving an Apple product, that is great. "But what drives us is the vision of the end result," said Cue.

Some of Apple's AI talent also comes from a steady stream of acquisitions. "In the past year we have bought 20 to 30 companies. They are relatively small, and what we really want is their people," said Cue. "When Apple buys an AI company, it brings in a lot of machine learning researchers, but we are not just collecting researchers," Federighi said. "We care about people who are very talented but who are really focused on delivering a great experience."

The most recent acquisition was Turi, a Seattle company, which Apple bought for $200 million. Turi built a machine learning toolkit that has been compared with Google's TensorFlow, and the purchase has fueled speculation that Apple will use it for similar purposes, both internally and for developers. "Some of what they do fits Apple very well, both technically and in terms of people," Cue said. In a year or two, we may find out what happened, as we did after Apple bought Cue, a small startup (no relation to Eddy Cue), in 2013, and Siri began to show some predictive abilities.

Wherever this talent comes from, Apple's AI infrastructure has helped it build products and features that were previously impossible, and it is changing the company's product roadmap. "At Apple, there is no end to the list of cool ideas," Schiller said. "Machine learning is letting us say yes to things we would have said no to in past years. It is becoming embedded in how we make decisions and shapes what our next products will be."

Take the Apple Pencil for the iPad Pro. To build a high-tech stylus, Apple had to deal with the fact that when people write on the device, the heel of the palm inevitably rests on the screen and triggers spurious touches. A machine learning model for "palm rejection" solves this neatly: it lets the screen's sensors tell the difference between a swipe, a touch and a pencil stroke, which greatly improves the stylus's accuracy. "If this doesn't work perfectly, the iPad is no longer a good piece of paper for me to write on, and Pencil is not a good product," Federighi said. So if you love your Apple Pencil, thank machine learning.

Four

The best measure of Apple's progress in machine learning may be its most important AI acquisition: Siri. Siri originated in a DARPA program to build an intelligent assistant; some of the scientists involved later founded a company and built an app on the same technology. In 2010, Jobs personally persuaded the founders to sell the company to Apple and directed that Siri be built into the operating system. Siri was the star of the iPhone 4S launch in October 2011. Today it is no longer limited to being summoned by long-pressing the Home button or by saying "Hey Siri" (a feature that itself uses machine learning so the iPhone can listen to its surroundings without draining the battery). Siri's intelligence is woven into the Apple Brain and works even when Siri itself is not being invoked.

Describing Siri as a core product, Cue named four components: speech recognition (understanding what you say), natural language understanding (grasping what you mean), execution (fulfilling a query or request), and response (replying to you). "Machine learning has had an important impact on all of these."

Tom Gruber (top) and Alex Acero

Tom Gruber, senior director of Siri research and development, joined Apple with the original acquisition. He said that even before Apple applied neural networks to Siri, its huge user base was generating a great deal of data, which is essential for training neural networks. "Steve said we would have millions of users overnight, with no need for a public beta. Suddenly you have users telling you how people talk to the app. That was the first revolution. Then the era of neural networks arrived."

As Siri moved to neural networks for speech recognition, several AI experts came aboard, including Alex Acero, now head of the speech team. Acero began his speech recognition career at Apple in the 1990s and then spent many years at Microsoft Research. "I loved this kind of work and published many papers. When Siri appeared, I realized this was a chance to make deep neural networks real, not for a few hundred people but for millions." In other words, he is exactly the kind of scientist Apple is looking for: one who puts products ahead of papers.

When Acero joined three years ago, Siri's speech technology still came largely from a third-party license, and that had to change. Federighi noted that this is a pattern Apple repeats again and again. "As a technology becomes more and more central to our core products, we gradually bring its development in-house. To build great products, we want to own the technology and innovate internally. Speech recognition is a good example."

The team began training neural networks to replace Siri's earlier technology. Apple's GPU clusters ran nonstop, churning through vast amounts of data. The July 2014 release proved that the effort had paid off.

"The error rate was cut in half in every language, and in many cases by even more," Acero said. "That was mostly due to deep learning and the way we optimized it, not just the algorithm itself but the whole end-to-end product development process."

Apple was not the first company to use DNNs for speech recognition, but it showed that controlling the entire system is an advantage. Acero said that because Apple designs its own chips, he could work directly with the chip design team and the engineers who write the firmware to maximize neural network performance. The Siri team's needs have even influenced many aspects of the iPhone's design.

"It's not just the chip," Federighi said. "It's also the microphones on the device, where they are placed, and the software stack that tunes the hardware and processes the audio. All of these pieces have to work together, and that is an amazing advantage over companies that only build software."

Another advantage is that when a neural network succeeds in one Apple product, it can become core technology in others. The machine learning that helped Siri understand users also turned typing into dictation. Thanks to Siri's technology, users' dictated messages come out more fluent and complete.

The second component Cue mentioned is natural language understanding. Siri began using machine learning to understand user intent in November 2014 and launched a deep learning version a year later. As with speech recognition, machine learning improved the experience, especially in interpreting commands.

Apple believes that without Siri's technology, it could hardly have built the latest Apple TV, which relies on voice control. Earlier versions of Siri required you to speak in a constrained way, but the deep-learning-enhanced version not only finds specific titles in huge catalogs of movies and music, but also handles requests such as "Play a good thriller starring Tom Hanks." That was completely impossible before.

In iOS 10, which is about to be officially released, Siri's voice is the last component to be transformed by machine learning. Here too, deep neural networks replace previously licensed technology. Siri's voice is built from a database of recordings made at a voice center, and each sentence is stitched together from speech segments. Machine learning smooths the result so that it sounds more like a real person.

This may seem like a small detail, but a more natural voice can make a big difference to Siri. "If the voice quality is higher, people trust it more," Gruber said. "A better voice draws users in and gets them to use it more often."

Both users' willingness to use Siri and advances in machine learning matter as Apple opens Siri to developers. Many have noted that Apple's Siri partners number only in the double digits, far behind Amazon's Alexa, which claims more than 1,000 "skills" built by outside developers. Apple considers the comparison meaningless, because Amazon users have to use specific phrasing to invoke those skills. Apple says Siri integrates services such as Uber and Square Cash more naturally.

Meanwhile, Apple's improvements to Siri are paying off. Users are discovering new features and finding that common queries return more accurate results, and query volume has grown accordingly.

Five

Perhaps the biggest challenge Apple faces in using machine learning is upholding its principle of protecting user privacy. Apple encrypts user data so that no one can read it, not even the company's own lawyers. Nor can the FBI, even with a warrant (for background on the dispute between Apple and the FBI, see Lei Feng Net's analysis). Apple also says it does not collect user information for advertising.

From the user's point of view, this is admirable, but it does not help attract top AI talent. A former Apple employee said: "Machine learning experts want data. But for the sake of privacy, Apple always holds them back. Whether that's right or not is another question, but it leaves the outside world thinking that Apple isn't hardcore about AI."

Apple executives disagree. They argue that it is possible to improve machine learning without keeping user information in the cloud or even storing the data used to train neural networks. "There has been a false narrative and a false trade-off," Federighi said. "We want to set the record straight."

There are two issues here. The first concerns handling personal information inside a machine learning system: what happens when a neural network collects personal details? The second concerns gathering the data needed to train neural networks to recognize behavior: how do you train without collecting personal information?

Apple has answers to both. Cue said: "Some people think we can't do these things with AI because we don't have the data. But we have found ways to get the data we need while protecting privacy. That is our bottom line."

For the first issue, Apple's solution draws on its unique control of both hardware and software. Put simply, most personal information stays in the Apple Brain on the device. "We keep some of the most sensitive information on the device, and the machine learning runs entirely locally," Federighi said. He gave the example of app suggestions, the icons that appear when you swipe right on the home screen. Ideally, these are the apps you intend to open next. The predictions draw on many factors, most of them related to the user's behavior. The feature really works, Federighi said: it predicts the app the user wants about 90 percent of the time.

Other information Apple keeps on the device may be the most personal of all: the words you type on the iPhone keyboard. Using a system trained with neural networks, Apple can pick out key events and items such as flight information, contacts and appointments. But this information stays on the phone. Even when it is backed up to Apple's cloud, it is processed in such a way that it cannot be reconstructed from the backup alone. "We don't want that information on Apple's servers. The company doesn't need to know your habits or where you go."

Apple also minimizes how much information it retains overall. For example, someone might mention a term in a conversation that could be worth searching for. Other companies would likely analyze the entire conversation in the cloud to identify such terms, but an Apple device can identify them without the data ever leaving the user's hands, because the system constantly matches text against a knowledge base stored on the phone.

"The knowledge base is compact but quite comprehensive, storing thousands of locations and entities," Federighi said. All Apple apps can use it, including Spotlight search, Maps and the browser. It also helps with autocorrect and runs continuously in the background.

One question in machine learning circles is whether Apple's privacy restrictions will hobble its neural network algorithms; this is the second issue mentioned above. Neural networks become accurate only after training on large amounts of data. If Apple does not collect user behavior data, where does its data come from? Like other companies, Apple trains neural networks on public datasets, but it still needs fresher, more accurate data, which can only come from users. Apple's approach is to collect information without knowing who the users are: it anonymizes the data and tags it with random identifiers.

Starting with iOS 10, Apple will use a new technique called differential privacy, which crowdsources information while keeping individual identities unrecognizable. It may be used to spot a new buzzword that is not yet in Apple's knowledge base, a link that suddenly becomes relevant to a particular query, or an expression that suddenly becomes heavily used. "The traditional approach would be to send every word the user types to the server and then comb through the data for anything interesting. But we use end-to-end encryption, so we won't do that." Although differential privacy is a rather academic term, Apple hopes to make it more widely known.

"We started developing and researching this several years ago and achieved some really interesting results that can be applied widely. Its privacy properties are amazing," Federighi said. Put simply, differential privacy adds mathematical noise to pieces of data, so that Apple can detect usage patterns without identifying individuals. Apple has also allowed the researchers working on the technique to publish papers about their work.
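The description above is necessarily compressed, and the article does not describe Apple's actual mechanism. As a purely illustrative sketch (the function names, the privacy parameter value and the 30 percent population are hypothetical), the classic randomized-response technique shows how "adding noise" can still reveal a population-level pattern: each device answers a yes/no question, such as whether it has seen a new word, truthfully only with a probability set by a privacy parameter epsilon, and the server inverts the known noise rate to estimate how common the word is. This is a textbook local differential privacy method, not necessarily the one Apple ships.

```python
import math
import random


def randomized_response(true_bit: bool, epsilon: float, rng: random.Random) -> bool:
    """Report the true bit with probability e^eps / (1 + e^eps); otherwise flip it.

    Classic randomized response: it satisfies epsilon-local differential
    privacy for a single yes/no answer. (Illustrative, not Apple's mechanism.)
    """
    p_truth = math.exp(epsilon) / (1.0 + math.exp(epsilon))
    return true_bit if rng.random() < p_truth else not true_bit


def estimate_frequency(reports: list[bool], epsilon: float) -> float:
    """Unbiased estimate of the true fraction of 'yes' answers from noisy reports.

    If a fraction f of devices truly answer yes, the expected observed fraction
    is p*f + (1 - p)*(1 - f), so f = (observed - (1 - p)) / (2p - 1).
    """
    p = math.exp(epsilon) / (1.0 + math.exp(epsilon))
    observed = sum(reports) / len(reports)
    return (observed - (1.0 - p)) / (2.0 * p - 1.0)


if __name__ == "__main__":
    rng = random.Random(0)
    epsilon = 1.0  # hypothetical privacy budget; smaller means noisier reports
    # Hypothetical population: 30% of 100,000 devices have typed a new word.
    truth = [rng.random() < 0.30 for _ in range(100_000)]
    reports = [randomized_response(bit, epsilon, rng) for bit in truth]
    print(f"estimated share: {estimate_frequency(reports, epsilon):.3f}")
```

With epsilon set to 1, any single report is truthful only about 73 percent of the time, so no individual answer can be trusted, yet across 100,000 devices the aggregate estimate lands close to the true 30 percent. A smaller epsilon strengthens the per-device guarantee at the cost of a noisier aggregate.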

Six

Clearly, machine learning has changed many aspects of Apple's products, but how it will change Apple itself remains to be seen. Intuitively, machine learning seems at odds with Apple's temperament. Apple likes to control the user experience completely: everything is designed for you in advance and the code is carefully optimized. Using machine learning, however, means handing part of the decision-making to software and gradually letting the machine shape the user experience. Can Apple accept that?

"This has caused endless internal debate," Federighi said. "We have thought about it very deeply. In the past, we drew on experience to control the details of human-computer interaction along many dimensions in order to deliver the best user experience. But once you start training a machine on large amounts of data to mimic human behavior, the result is no longer something Apple's designers craft by hand. It all comes from the data."

But Apple is not looking back. "Although this technology will change the way we work, we will keep going further down this road in order to make better products," Schiller said.

Perhaps this is the answer: Apple will not trumpet its advanced machine learning technology, but it will use it as much as possible to deliver a better user experience. The Apple Brain hidden in your iPhone is the best proof.

"Typical Apple users will enjoy the improvements machine learning brings without even knowing it, and will fall in love with Apple products because of it," Schiller said. "The most exciting part is that you don't even notice it's there, until one day you suddenly realize it and say, 'How did that happen?'"

Skynet is not coming.