Lei Feng Network note: This article was translated by an engineer at Tupu Technology from "Facebook's New AI Can Paint, But Google's Knows How to Party". It is a Lei Feng Network exclusive.

Facebook and Google are building huge neural networks, artificial brains that can instantly recognize faces, cars, buildings, and other objects in digital photographs. But they can do more than that.

They can recognize spoken words, translate one language into another, target ads, and teach a robot to screw a cap onto a bottle. And if you turn these brains upside down, you can teach them not just to recognize images but to create them, in rather interesting (and sometimes disturbing) ways.

Facebook has revealed that it is training its neural networks to automatically generate small images of objects such as airplanes, cars, and animals. About 40 percent of the time, these images fool people into believing they are real. "This model can distinguish between images you would take with your phone and unnatural images, such as the white noise on your TV or some sort of abstract art image," says Rob Fergus, a Facebook artificial intelligence researcher. "It understands the structure of how images are composed" (pictured).

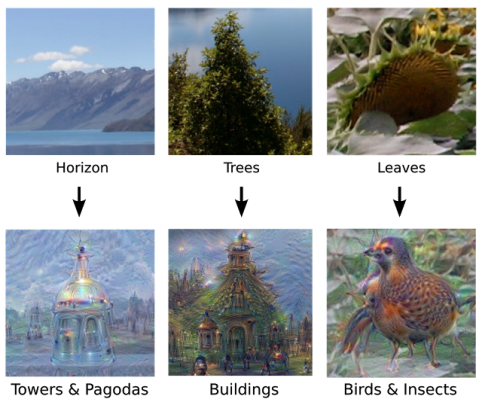

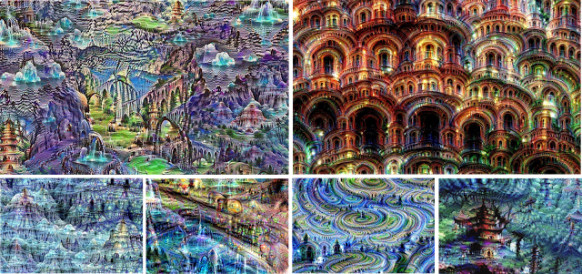

Meanwhile, Google's researchers have pushed things to the other extreme: they use neural networks to turn real photos into unreal but intriguing images. They train machines to look for familiar patterns in a photo, strengthen those patterns, and then repeat the process on the same image. "This creates a feedback loop: if a cloud looks a little bit like a bird, the network will make it look more like a bird," Google says in a blog post explaining the project. "And when the modified image passes through the network again, the network recognizes the 'bird' more strongly. In the end, a detailed bird appears, seemingly out of thin air." The result is a kind of machine-generated abstract art (see below).

Google's neural network sees the outline of a tower on the horizon, then enhances that outline until a complete image appears.
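The feedback loop Google describes can be illustrated with a very small sketch. This is not Google's code: it replaces the neural network with a single linear "detector" over five pixels, and the names `TEMPLATE`, `detector_score`, and `amplify`, along with the step size, are assumptions made for illustration. The idea it shows is the same one, though: measure how strongly the detector responds, nudge the image in the direction that raises that response, and repeat on the modified image.

```python
# Toy stand-in for the feedback loop: one linear "bird detector".
# TEMPLATE, the pixel values, and the step size are illustrative assumptions.

TEMPLATE = [0.0, 1.0, 2.0, 1.0, 0.0]  # hypothetical pattern the detector responds to


def detector_score(image):
    """Detector response: dot product of the image with the template."""
    return sum(p * t for p, t in zip(image, TEMPLATE))


def amplify(image, steps=5, step_size=0.1):
    """Repeatedly nudge the image in the direction that raises the score."""
    image = list(image)
    scores = [detector_score(image)]
    for _ in range(steps):
        # For a linear detector, the gradient of the score with respect to
        # each pixel is simply the matching template value.
        image = [p + step_size * t for p, t in zip(image, TEMPLATE)]
        scores.append(detector_score(image))
    return image, scores


cloud = [0.2, 0.3, 0.4, 0.3, 0.2]  # faintly resembles the template
dreamed, scores = amplify(cloud)
print([round(s, 2) for s in scores])
```

Each pass raises the detector's response (from 1.4 at the start, by 0.6 per step with these numbers), so a faint resemblance becomes a strong one. A real network would compute the gradient by backpropagation through many layers rather than reading it off a template.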

On one level, these are just party tricks, especially Google's feedback loop, which produces hallucination-like images. It is also worth noting that Facebook's fake images are only 64×64 pixels. But on another level, these projects can be used to improve neural networks and bring them closer to human-like intelligence. David Luan, CEO of a computer vision company called Dextro, said: "This work helps to better visualize what our networks are actually learning."

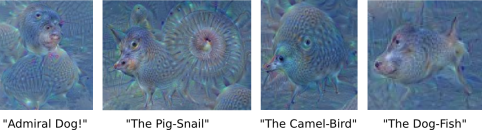

These results are also somewhat disturbing, and not only because Google's images look like the product of too many hallucinogens, crossbreeding birds with camels or snails with pigs (see below). More than that, they hint at a world where machines control what we see and hear in ways we do not notice, a world where the real and the illusory are intertwined.

Together with two other Facebook researchers and a doctoral student at New York University's Courant Institute of Mathematical Sciences, Fergus published a paper on the academic preprint repository arXiv.org introducing the image generation model. The system uses two neural networks that compete with each other. One network tries to recognize natural images, and the other tries to fool the first as often as possible.

Yann LeCun, the head of Facebook's artificial intelligence laboratory, calls this adversarial training. "They are playing against each other," he said of the two networks. "One tries to deceive the other, and the other tries not to be deceived." The result is a system that produces very realistic images.
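The two-player setup LeCun describes can be sketched on plain numbers instead of images. The following is a minimal illustration of adversarial training, not the model from the Facebook paper: the "real" data, the one-parameter generator, the logistic discriminator, and the learning rate are all assumptions chosen to keep the example short and deterministic.

```python
import math

# Toy adversarial training on 1-D numbers instead of images.
# All names, data, and learning rates here are illustrative assumptions.

REAL = [3.5, 4.0, 4.5]     # stand-in for "natural" data
NOISE = [-0.5, 0.0, 0.5]   # fixed generator inputs, so the run is deterministic


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def discriminate(x, w, c):
    """Probability the discriminator assigns to x being real."""
    return sigmoid(w * x + c)


def generate(theta):
    """Generator: shifts the fixed noise by a learnable offset theta."""
    return [theta + z for z in NOISE]


def mean(values):
    return sum(values) / len(values)


def train(rounds=20, lr=0.1):
    w, c, theta = 0.0, 0.0, 0.0
    history = [theta]
    for _ in range(rounds):
        fake = generate(theta)
        # Discriminator step: minimize -log D(real) - log(1 - D(fake)).
        grad_w = (mean([-(1 - discriminate(x, w, c)) * x for x in REAL])
                  + mean([discriminate(x, w, c) * x for x in fake]))
        grad_c = (mean([-(1 - discriminate(x, w, c)) for x in REAL])
                  + mean([discriminate(x, w, c) for x in fake]))
        w -= lr * grad_w
        c -= lr * grad_c
        # Generator step: minimize -log D(fake), i.e. try to fool
        # the freshly updated discriminator.
        grad_theta = mean([-(1 - discriminate(x, w, c)) * w for x in fake])
        theta -= lr * grad_theta
        history.append(theta)
    return w, c, theta, history


w, c, theta, history = train()
print("generator offset per round:", [round(t, 3) for t in history])
```

In the first round the discriminator learns that larger numbers look more "real", and the generator responds by shifting its output upward (from 0 to about 0.01 with these settings). Training of this kind is known to oscillate rather than settle cleanly, so the sketch is meant to show the alternating updates, not a recipe for convergence.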

LeCun and Fergus believe this result can be used to restore degraded pictures so that they look real again. "You can restore an image to a natural image," Fergus said. More importantly, they believe, the system is a step toward "unsupervised machine learning." In other words, it can help machines learn without explicit guidance from human researchers.

Ultimately, LeCun said, you can use this model to train an image recognition system with nothing more than a set of "unlabeled" example images, which means humans do not need to go through the training images and label what is in them with text. "The machine can learn the structure of the image without knowing the content of the image," he said.

Luan pointed out that the current system still needs some supervision. But he called Facebook's paper "elegant work," and he believes that, like Google's work, it can help us understand how neural networks behave.

Middle layer

The neural networks built by Facebook and Google consist of many layers of neurons, each of which works in conjunction with other neurons. Although these networks perform some tasks very well, we do not fully understand why. "One of the challenges of researching neural networks is understanding what's going on at each layer," Google says in its blog post (the company declined to discuss its image generation work further).

Google explained that by turning its neural networks upside down and teaching them to generate images, it can better understand how they work. Google asked its network to amplify whatever it found in an image. Sometimes the network simply amplifies the edges of a shape. At other times it amplifies more complex things, such as the outline of a tower on the horizon, a building in a tree, or shapes in random noise (see above). In each case, researchers get a better view of what the network is seeing.

Google said that "this technique gives us a qualitative sense of the level of abstraction each layer of the network has reached in its understanding of images." It helps researchers "visualize how neural networks are able to carry out difficult classification tasks, improve network architecture, and check what the network has learned during training."

And like Facebook's work, this achievement is a bit cool, a little weird, and a bit scary. It seems that the better computers get at recognizing what is real, the harder it becomes for us.
