This article comes from Lorenzo Miniero, co-founder of Meetecho, who explains how to use Firefox and WebRTC for YouTube live streaming. Meetecho is the company behind the well-known Janus WebRTC server. LiveVideoStack has excerpted the original text.

We have all recently seen the news about YouTube live streaming via WebRTC, but it only works if you are using Google Chrome. Neither Firefox nor Edge is supported. As for Apple's browser, to be honest, I don't care much...

What would it take for Firefox to send content via WebRTC and then watch it as a live broadcast on YouTube? Maybe with some HTML5 canvas work thrown in for fun. With the Kamailio World Dangerous Demos season opening, this was a great opportunity to work on it, which is exactly what I did!

What I needed was:

A way to capture video in the browser, edit it in some way, and use it in a WebRTC PeerConnection;

A WebRTC server to receive the stream from the browser;

Some way to convert the stream into a format that YouTube Live accepts.

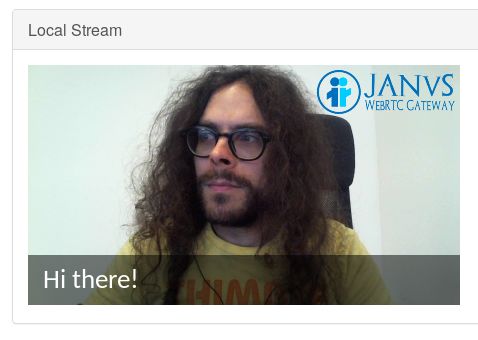

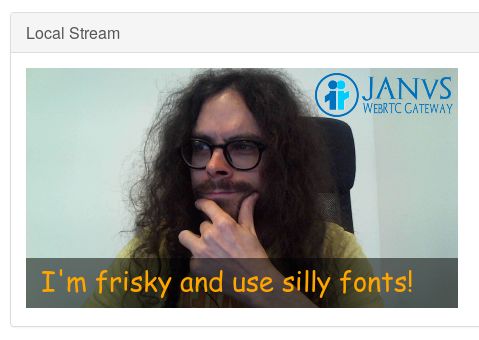

The first part was very interesting because I had never done it before. Or rather, I have captured and published a large number of WebRTC streams over the past few years, but I had never tried to manipulate the video on the browser side. I knew you could use HTML5 canvas elements for this, but I had never used them, so I decided to try now. And friends, it's really fun! It basically comes down to the following steps:

Create an HTML5 canvas element to draw;

Get the media stream through the usual getUserMedia;

Put the media stream into an HTML5 video element;

Start drawing the video frames on the canvas, plus any other elements you like (text overlays, images, etc.);

Use captureStream() to get a new media stream from the canvas;

Use the new media stream as the source of the new PeerConnection;

Keep drawing on the canvas as if there were no tomorrow!
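As a rough illustration of the steps above (not the code used in the demo), the browser side might look something like the following TypeScript sketch. The names `overlayLayout` and `startCanvasPublisher`, the overlay sizes, the 30 fps capture rate, and the reuse of the microphone track are all assumptions made for the example.

```typescript
interface Rect { x: number; y: number; w: number; h: number }

// Hypothetical helper: where the overlays go for a given canvas size.
function overlayLayout(
  width: number,
  height: number,
  logoSize = 64,
  margin = 10,
  barHeight = 60,
): { logo: Rect; bar: Rect } {
  return {
    // Logo in the upper right corner.
    logo: { x: width - logoSize - margin, y: margin, w: logoSize, h: logoSize },
    // Bar across the bottom of the frame.
    bar: { x: 0, y: height - barHeight, w: width, h: barHeight },
  };
}

// Browser-only sketch: webcam -> <video> -> <canvas> -> captureStream() -> PeerConnection.
async function startCanvasPublisher(
  pc: RTCPeerConnection,
  logoImage: HTMLImageElement,
  caption: () => string, // called on every frame, so the text can change dynamically
): Promise<MediaStream> {
  // Get the media stream through the usual getUserMedia.
  const webcam = await navigator.mediaDevices.getUserMedia({ audio: true, video: true });

  // Put the media stream into a (muted, off-page) video element.
  const video = document.createElement("video");
  video.srcObject = webcam;
  video.muted = true;
  await video.play();

  // Create the canvas to draw on, sized like the video when known.
  const canvas = document.createElement("canvas");
  canvas.width = video.videoWidth || 640;
  canvas.height = video.videoHeight || 480;
  const ctx = canvas.getContext("2d");
  if (!ctx) throw new Error("2D canvas context not available");
  const { logo, bar } = overlayLayout(canvas.width, canvas.height);

  // Draw the current video frame plus overlays, then schedule the next frame.
  const draw = (): void => {
    ctx.drawImage(video, 0, 0, canvas.width, canvas.height);
    ctx.drawImage(logoImage, logo.x, logo.y, logo.w, logo.h);
    ctx.fillStyle = "rgba(0, 0, 0, 0.5)"; // translucent bar
    ctx.fillRect(bar.x, bar.y, bar.w, bar.h);
    ctx.fillStyle = "white";
    ctx.font = "24px sans-serif";
    ctx.fillText(caption(), 20, bar.y + 38);
    requestAnimationFrame(draw);
  };
  draw();

  // Get a new media stream from the canvas and use it as the PeerConnection source.
  const edited = canvas.captureStream(30);
  // Assumption: the canvas stream carries no audio, so the microphone track is reused.
  webcam.getAudioTracks().forEach((track) => edited.addTrack(track));
  edited.getTracks().forEach((track) => pc.addTrack(track, edited));
  return edited;
}
```

One caveat with this approach: browsers throttle `requestAnimationFrame` in background tabs, so the page has to stay visible for the drawing loop to keep its pace.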

That sounds like a lot of steps, but they are actually very easy to set up. In just a few minutes, I had some basic code that let me capture my webcam and add some overlays to it: a logo in the upper right corner, a translucent bar at the bottom, and some text on top. I also made the overlays configurable in the code so that I could update them dynamically. I am sure that many of you who have used canvas before will laugh at how trivial these examples are, but for me, as a beginner, this was a great achievement!

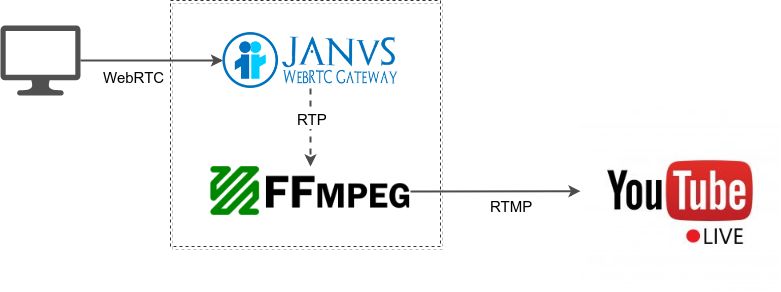

Anyway, the cool part was that I now had some basic video editing working in a test page, and a way to use the result as a PeerConnection source. The next step was to send this WebRTC stream to a server that I could work with. Not surprisingly, I used Janus for the purpose... The idea was simple: I needed something that could receive a WebRTC stream and then make it available elsewhere. Considering that this was one of the key reasons I started working on Janus a few years ago, it was a perfect choice! Specifically, I decided to use the Janus VideoRoom plugin. As anticipated, I needed a way to hand the incoming WebRTC stream to an external component for processing, in this case to convert it to the format YouTube Live expects for publishing. Besides its integrated SFU functionality, the VideoRoom plugin has a nice feature called "RTP forwarders", which allows exactly that. I will explain why and how it works later.

Finally, I needed something to convert the WebRTC stream to the format YouTube Live expects. As you may know, the traditional way to do that is RTMP. Several different software packages can help with this, but I chose a simple approach and used FFmpeg for the job: I didn't need any editing or publishing features (I had implemented those already), only something that could convert to the right protocol and codecs, which FFmpeg is very good at. Obviously, to make this work I first needed to get the WebRTC stream to FFmpeg, which is where the "RTP forwarders" mentioned above come in. As the name suggests, an RTP forwarder simply forwards RTP packets somewhere: in the context of the Janus VideoRoom, it relays the media packets coming from a WebRTC publisher, as plain (or encrypted, if needed) RTP, to one or more remote addresses. Since FFmpeg supports plain RTP as an input format (using an SDP file to bind to the right ports and specify the audio/video codecs in use), this is the best way to feed it WebRTC media streams!
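To make the coupling between the forwarder and FFmpeg concrete, here is a hedged TypeScript sketch that builds both pieces from one set of values: the body of a VideoRoom `rtp_forward` request and the matching SDP file for FFmpeg. The field names follow the legacy (0.x) form of the VideoRoom `rtp_forward` request as far as I know it; newer Janus versions describe forwarded streams differently, so check the plugin documentation for your version. The helper names, ports, payload types, and the choice of Opus and VP8 are assumptions for illustration only.

```typescript
// Hypothetical description of where the RTP should be sent.
interface ForwardTarget {
  host: string;      // address FFmpeg listens on
  audioPort: number;
  videoPort: number;
  audioPt: number;   // payload type to set on forwarded audio packets
  videoPt: number;   // payload type to set on forwarded video packets
}

// Body of a VideoRoom "rtp_forward" request (legacy field names, see lead-in).
function rtpForwardRequest(
  room: number,
  publisherId: number,
  target: ForwardTarget,
  secret?: string, // room secret, only if the room has one
): Record<string, unknown> {
  const body: Record<string, unknown> = {
    request: "rtp_forward",
    room,
    publisher_id: publisherId,
    host: target.host,
    audio_port: target.audioPort,
    audio_pt: target.audioPt,
    video_port: target.videoPort,
    video_pt: target.videoPt,
  };
  if (secret !== undefined) body.secret = secret;
  return body;
}

// SDP that tells FFmpeg which ports to bind to and which codecs to expect.
// Assumption: the publisher negotiated Opus audio and VP8 video.
function ffmpegInputSdp(target: ForwardTarget): string {
  return [
    "v=0",
    `o=- 0 0 IN IP4 ${target.host}`,
    "s=Janus RTP forward",
    `c=IN IP4 ${target.host}`,
    "t=0 0",
    `m=audio ${target.audioPort} RTP/AVP ${target.audioPt}`,
    `a=rtpmap:${target.audioPt} opus/48000/2`,
    `m=video ${target.videoPort} RTP/AVP ${target.videoPt}`,
    `a=rtpmap:${target.videoPt} VP8/90000`,
  ].join("\n") + "\n";
}

// Example values (all made up): forward to FFmpeg running on the same host.
const exampleTarget: ForwardTarget = {
  host: "127.0.0.1",
  audioPort: 10033,
  videoPort: 10038,
  audioPt: 111,
  videoPt: 96,
};
```

Setting the payload types explicitly in the request means the SDP does not depend on which numbers a particular browser happened to negotiate.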

At this point, I got everything I needed:

The browser as the editing/publishing software (canvas + WebRTC);

Janus as the intermediary (WebRTC-to-RTP);

FFmpeg as the transcoder (RTP-to-RTMP).

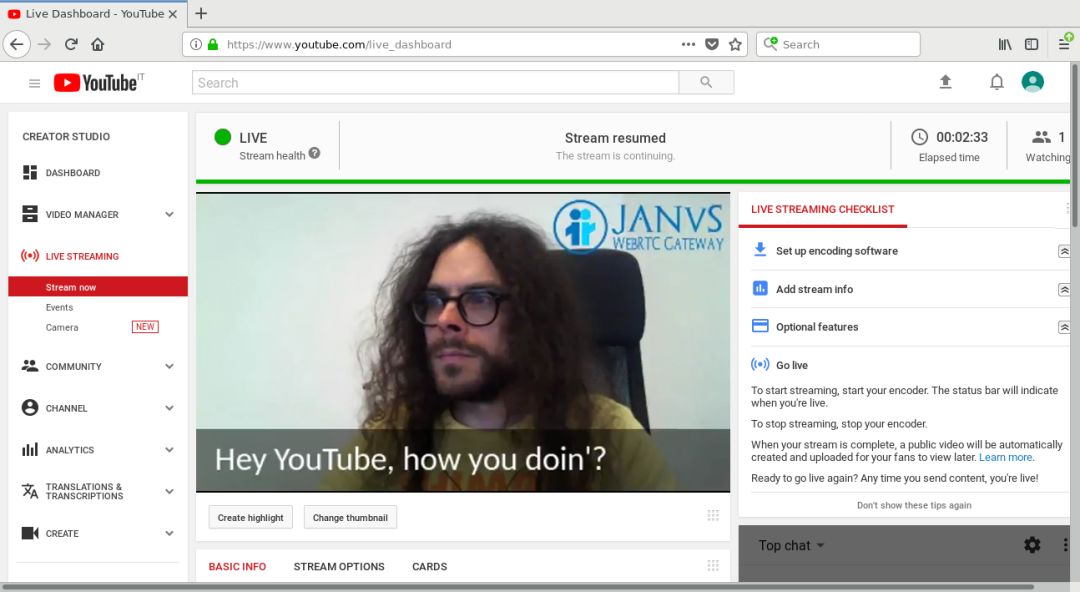

That said, the last step was to test all of this. Locally, everything worked as expected, using good old red5 as an open source RTMP server, but the real challenge was clearly getting it to work with YouTube Live. So I went to the control panel of Meetecho's YouTube account to verify it, and waited the usual 24 hours to get the information needed to publish a stream. This basically consists of the RTMP server to connect to and the unique (and secret) key that identifies the stream.

Searching around, I found some nice code snippets showing how to use FFmpeg to stream to YouTube Live. I modified the script to use my source and target information, so that it would publish there instead of to my local RTMP server. Happily, I got it to work, although not always perfectly: there were occasional problems here and there, but for a demo it already worked very well.
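The script itself is not reproduced in this excerpt, so the following is only a guess at the general shape of such a command, written as a TypeScript helper that assembles the FFmpeg arguments. The function name, the encoder settings, and the bitrates are assumptions; the RTMP server URL and the stream key must come from your own YouTube control panel, and the values shown are placeholders.

```typescript
// Hypothetical helper: FFmpeg arguments to read RTP (described by an SDP file)
// and publish H.264/AAC over RTMP. All encoder settings are illustrative.
function youtubeFfmpegArgs(sdpPath: string, rtmpServer: string, streamKey: string): string[] {
  return [
    // Recent FFmpeg builds require whitelisting the protocols an SDP file pulls in.
    "-protocol_whitelist", "file,udp,rtp",
    "-i", sdpPath,
    // Video: transcode to H.264, the codec RTMP/FLV ingestion expects.
    "-c:v", "libx264",
    "-preset", "veryfast",
    "-pix_fmt", "yuv420p",
    "-g", "60",      // keyframe interval in frames (about 2 s at 30 fps)
    "-b:v", "2500k",
    // Audio: transcode Opus to AAC.
    "-c:a", "aac",
    "-b:a", "128k",
    "-ar", "44100",
    // RTMP carries FLV; the stream key is appended to the server URL.
    "-f", "flv",
    `${rtmpServer}/${streamKey}`,
  ];
}

// Placeholder values: replace with the server and key from your own account.
const exampleArgs = youtubeFfmpegArgs(
  "janus.sdp",
  "rtmp://a.rtmp.youtube.com/live2",
  "YOUR-STREAM-KEY",
);
```

In a Node.js script, an array like this could be passed to `child_process.spawn("ffmpeg", exampleArgs)`; keeping the stream key out of source control is advisable since it is effectively a password.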

That's it, really; no other "magic" is needed. This could easily be turned into a variety of services. You can improve the editing part with some nicer canvas work (what I did is very basic), and make the "RTP forwarding + FFmpeg + YouTube Live credentials" part dynamic (for example, in terms of ports and accounts used) to support multiple streams and multiple events, but the fundamentals are all there.

Yes, I know what you are thinking: I am using WebRTC for streaming and it does end up on YouTube Live, but not directly. What I did was basically use the flexibility of Janus to handle the WebRTC stream, and use FFmpeg to do the actual broadcasting to YouTube the "Ye Olde" way. Anyway, it is still cool! Using an HTML5 canvas on the client side makes it easy to "edit" the stream to some extent, and gives me a lot of creative freedom. Besides, using WebRTC still feels good!
