Note: The author of this article, Jiang Haibing, is the product director of Fun Shoot and a veteran of the live broadcast industry.

The boom in mobile live broadcasting will continue for a long time, and as it integrates with other industries it will open up many new possibilities. There are three main reasons:

First, the UGC production model is more pronounced in mobile live broadcasting than in PC live broadcasting. Everyone has a device and can broadcast anytime, anywhere. This fits the open principle of the Internet era and encourages more people to create and share high-quality content.

Second, network bandwidth and speed are gradually increasing while network costs are gradually falling, which provides an excellent environment for mobile live broadcasting. Text, sound, video, games, and more can all be presented in a mobile live broadcast, creating a richer user experience. Live broadcasting can also be integrated into a company's own applications in the form of an SDK. For example, in education, after-school tutoring can be delivered by live broadcast; in e-commerce, live broadcasts can help users choose products and so promote sales.

Third, combining mobile live broadcasting with VR/AR technology opens new room for growth across the whole industry. VR/AR live broadcasts give users an immersive experience, bring the interaction between anchors and viewers closer to real life, and can greatly increase user engagement on the platform.

At the moment, Internet companies with technical strength and traffic advantages are unwilling to miss out on live broadcasting, and how to build a live broadcast system quickly has become a common concern. I would like to share my experience. I work on the development of a live broadcast product, and our product uses a cloud service provider's live broadcast SDK so that we could catch up with the market quickly.

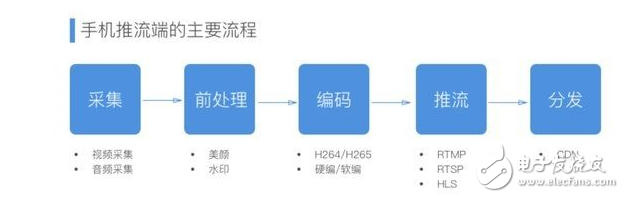

Practitioners know that a complete live broadcast product includes the following parts: the push-stream end (capture, pre-processing, encoding, pushing), server-side processing (transcoding, recording, screenshots, pornography detection), the player (stream pulling, decoding, rendering), and the interactive system (chat room, gift system, likes). Below I describe the work done in the live broadcast SDK.

1. What work does the mobile push-stream end need to do?

The push-stream end is the anchor's end. Video data is captured by the phone's camera and audio data by the microphone. The data then goes through pre-processing, encoding, and encapsulation before it is pushed to the CDN for distribution.
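
The order of these steps can be sketched as a small chain of functions. This is only an illustration, not the SDK's real code: every function name here is hypothetical, `zlib` stands in for a real audio/video codec, the 9-byte header is an invented container rather than an actual streaming format, and a Python list plays the role of the connection to the CDN.

```python
import struct
import zlib
from typing import List

VIDEO = 1  # hypothetical type tag for video packets


def preprocess(raw: bytes) -> bytes:
    # Placeholder: a real pipeline would modify the captured frame here.
    return raw


def encode(raw: bytes) -> bytes:
    # Stand-in for a real codec; zlib only demonstrates the size reduction.
    return zlib.compress(raw)


def encapsulate(kind: int, timestamp_ms: int, encoded: bytes) -> bytes:
    # Invented header: 1-byte type, 4-byte timestamp, 4-byte payload length.
    return struct.pack(">BII", kind, timestamp_ms, len(encoded)) + encoded


def push(packet: bytes, outbox: List[bytes]) -> None:
    # Stand-in for sending the packet to the CDN ingest point.
    outbox.append(packet)


def push_frame(raw: bytes, timestamp_ms: int, outbox: List[bytes]) -> bytes:
    # Capture -> pre-processing -> encoding -> encapsulation -> push.
    packet = encapsulate(VIDEO, timestamp_ms, encode(preprocess(raw)))
    push(packet, outbox)
    return packet


outbox: List[bytes] = []
raw_frame = bytes(640 * 480 * 3 // 2)  # one all-zero 640x480 YUV 4:2:0 frame
packet = push_frame(raw_frame, 0, outbox)
print(f"raw: {len(raw_frame)} bytes, pushed packet: {len(packet)} bytes")
```

Keeping each step as a separate function mirrors the way the stages are listed above: any one of them can be swapped out (for example, a different encoder) without touching the others.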

1) Capture

The mobile live broadcast SDK captures audio and video data directly from the phone's camera and microphone. Video samples are generally in RGB or YUV format, and audio samples are generally in PCM format. The raw captured audio and video data is very large and must be compressed to improve transmission efficiency.
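
To get a feel for how large the raw data is, the following rough calculation estimates the uncompressed data rate. The resolution, frame rate, and audio settings are assumptions chosen for illustration, not figures from any particular SDK; the bytes-per-pixel values are the standard ones for 24-bit RGB and YUV 4:2:0.

```python
# Bytes per pixel for two common raw video layouts.
BYTES_PER_PIXEL = {"RGB24": 3.0, "YUV420": 1.5}


def raw_video_bytes_per_second(width: int, height: int, fps: int, pixel_format: str) -> int:
    # Every frame stores width * height pixels at the format's bytes-per-pixel.
    return int(width * height * BYTES_PER_PIXEL[pixel_format] * fps)


def raw_pcm_bytes_per_second(sample_rate: int, channels: int, bytes_per_sample: int) -> int:
    # PCM stores one fixed-size sample per channel at every sampling instant.
    return sample_rate * channels * bytes_per_sample


# Assumed capture settings: 1280x720 at 30 fps, 44.1 kHz 16-bit stereo audio.
video_yuv = raw_video_bytes_per_second(1280, 720, 30, "YUV420")
video_rgb = raw_video_bytes_per_second(1280, 720, 30, "RGB24")
audio_pcm = raw_pcm_bytes_per_second(44100, 2, 2)

for label, rate in [("YUV420 video", video_yuv), ("RGB24 video", video_rgb), ("PCM audio", audio_pcm)]:
    print(f"{label}: {rate * 8 / 1e6:.1f} Mbit/s")
```

Under these assumptions raw YUV 4:2:0 video alone needs about 332 Mbit/s, far beyond what a mobile uplink can carry, which is why encoding has to happen before the stream is pushed.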

Note: Capture is relatively simple on iOS. Android requires some device-model adaptation work. The PC is the most troublesome: problems with the various camera drivers are particularly hard to deal with. I recommend giving up PC and supporting only mobile anchors, as several new live broadcast platforms currently do.
