In this article, the author discusses eight methods for performing simple linear regression in Python. Rather than examining their performance in depth, the article compares their relative computational complexity.

For most data scientists, linear regression is the starting point for statistical modeling and predictive analysis. It is hard to overstate the importance of linear models, which are both fast and accurate, for fitting large data sets. As this article shows, the term "linear" in a linear regression model refers to the regression coefficients, not to the degree of the features.

A feature (or independent variable) can be of any degree, or even a transcendental function such as an exponential, logarithmic, or sine function. Many natural phenomena can therefore be approximated by combining such transformations with a linear model, even when the functional relationship between the output and the features is highly nonlinear.
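The point about "linear" is easier to see with a small example. The following is a minimal sketch on synthetic data. The model y = a + b·log(x) + c·sin(x) and its coefficients are illustrative assumptions and are not taken from the article. The model is nonlinear in x but linear in a, b and c, so ordinary least squares on a design matrix of transformed features recovers the coefficients. For transparency, the normal equations are solved directly.

```python
import numpy as np

# Hypothetical synthetic data: y depends nonlinearly on x,
# but linearly on the coefficients (1.5, 0.8, 2.0).
rng = np.random.default_rng(42)
x = np.linspace(0.1, 10, 200)
y = 1.5 + 0.8 * np.log(x) + 2.0 * np.sin(x) + rng.normal(scale=0.1, size=x.size)

# Design matrix: an intercept column plus two transcendental transforms of x.
X = np.column_stack([np.ones_like(x), np.log(x), np.sin(x)])

# Ordinary least squares via the normal equations (X^T X) beta = X^T y.
coef = np.linalg.solve(X.T @ X, X.T @ y)
print(coef)
```

The estimates should land near the values used to generate the data. Only the columns of the design matrix are nonlinear; the fitting step itself is an ordinary linear least-squares solve.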

On the other hand, because Python is rapidly evolving as the programming language of choice for data scientists, it is important to realize that there are many ways to fit large data sets with linear models. Equally important, data scientists need to evaluate the importance associated with each feature from the results obtained from the model.

However, is there only one way to perform linear regression analysis in Python? If there are multiple ways, how should we choose the most effective one?

Because scikit-learn is a very popular Python machine learning library, people often call its linear models to fit data. Its Pipeline and FeatureUnion utilities also let you chain additional steps, such as data normalization, regularization of the regression coefficients, or passing the linear model on to a downstream model. In general, however, if a data analyst only needs a quick and easy way to determine the regression coefficients (or some basic related statistics), this is neither the fastest nor the most concise method.
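The following is a minimal sketch of the kind of pipeline described above, on hypothetical synthetic data. It chains feature standardization with an L2-regularized linear model (`Ridge`). The choice of `StandardScaler`, `Ridge` and `alpha=1.0` is an assumption for illustration, and FeatureUnion is not used here.

```python
import numpy as np
from sklearn.linear_model import Ridge
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Hypothetical synthetic data: one feature, true line y = 2x + 1 plus noise.
rng = np.random.default_rng(0)
X = rng.uniform(0, 10, size=(100, 1))  # scikit-learn expects a 2-D feature matrix
y = 2.0 * X[:, 0] + 1.0 + rng.normal(scale=0.5, size=100)

# Normalize the feature, then fit a ridge (L2-regularized) linear model.
model = make_pipeline(StandardScaler(), Ridge(alpha=1.0))
model.fit(X, y)

# make_pipeline names each step after its lowercased class name.
ridge = model.named_steps["ridge"]
scaler = model.named_steps["standardscaler"]

# The coefficient is in standardized units; convert back to original units.
slope = ridge.coef_[0] / scaler.scale_[0]
intercept = ridge.intercept_ - slope * scaler.mean_[0]
print(slope, intercept)
```

This flexibility is what the extra ceremony buys. Recovering a plain slope and intercept requires undoing the scaling by hand, and the ridge penalty shrinks the slope slightly toward zero.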

While there are other faster and more concise methods, none of them provide the same amount of information and model flexibility.

Please read on.

The code for the various linear regression methods can be found in the author's GitHub. Most of them are based on the SciPy package.

SciPy is built on NumPy and combines mathematical algorithms with easy-to-use functions. It significantly enhances Python's interactive sessions by providing users with high-level commands and classes for manipulating and visualizing data.

A brief discussion of the various methods follows.

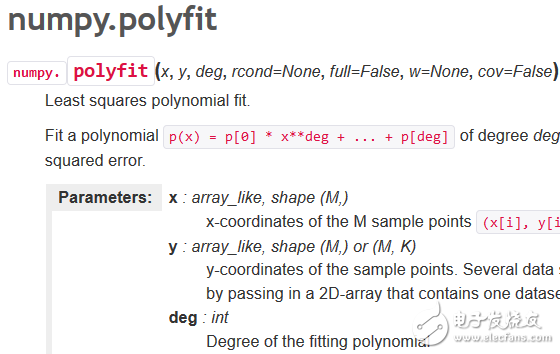

Method 1: scipy.polyfit() or numpy.polyfit()

This is a very general least-squares polynomial fitting function. It takes a dataset and a polynomial degree (specified by the user) and returns an array of regression coefficients that minimize the squared error.

For a simple linear regression, you can set the degree to 1. If you want to fit a model with a higher degree, you can also do this by building polynomial features from the linear feature data.

A detailed description is available at https://docs.scipy.org/doc/numpy-1.13.0/reference/generated/numpy.polyfit.html.
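Here is a minimal sketch of `numpy.polyfit` on hypothetical synthetic data. With `deg=1` it performs simple linear regression. The coefficients are returned highest power first, so the slope comes before the intercept. The same call with a larger `deg` fits a higher-degree polynomial.

```python
import numpy as np

# Hypothetical synthetic data around the line y = 3x - 2.
rng = np.random.default_rng(0)
x = np.linspace(0, 10, 50)
y = 3.0 * x - 2.0 + rng.normal(scale=0.5, size=x.size)

# deg=1: simple linear regression; returns [slope, intercept].
slope, intercept = np.polyfit(x, y, deg=1)

# deg=2: quadratic fit; returns [a2, a1, a0], highest power first.
quad = np.polyfit(x, y, deg=2)
y_hat = np.polyval(quad, x)  # evaluate the fitted polynomial at x
print(slope, intercept, quad)
```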

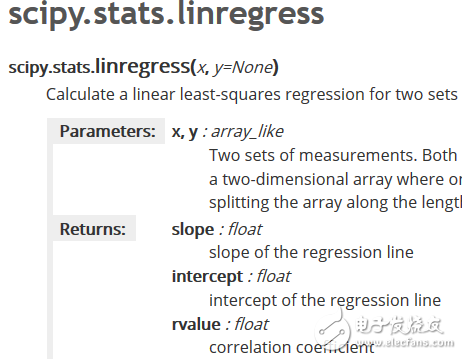

Method 2: stats.linregress()

This is a highly specialized linear regression function in SciPy's statistics module. Its flexibility is quite limited because it is optimized only for computing a least-squares regression between two sets of measurements. You therefore cannot use it to fit a general linear model or to perform multivariate regression analysis. However, because it is built for this one specialized task, it is one of the fastest methods for simple linear regression. In addition to the fitted slope and intercept, it returns basic statistics such as the correlation coefficient r (whose square is R²) and the standard error of the estimated slope.

Detailed description reference:
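Here is a minimal sketch of `scipy.stats.linregress` on hypothetical synthetic data. The result object exposes `slope`, `intercept`, `rvalue`, `pvalue` and `stderr`. R² is obtained by squaring `rvalue`.

```python
import numpy as np
from scipy import stats

# Hypothetical synthetic data around the line y = 0.5x + 4.
rng = np.random.default_rng(1)
x = rng.uniform(0, 10, size=100)
y = 0.5 * x + 4.0 + rng.normal(scale=1.0, size=x.size)

res = stats.linregress(x, y)
r_squared = res.rvalue ** 2  # coefficient of determination
print(res.slope, res.intercept, r_squared, res.stderr)
```

Both inputs must be one-dimensional sequences of equal length, which is why this function cannot handle multiple predictors.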

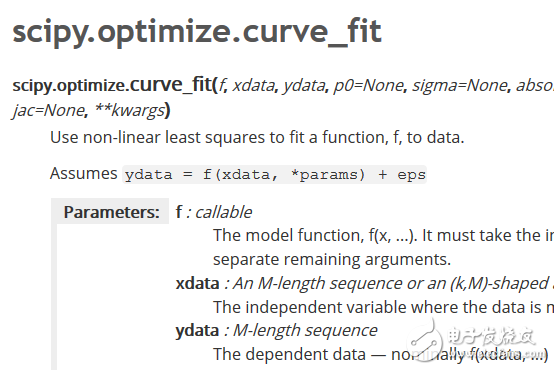

Method 3: optimize.curve_fit()

This method is similar to polyfit but more general in nature. This powerful function from the scipy.optimize module can fit any user-defined function to a dataset by least-squares minimization.

For a simple linear regression task, we can write a linear function, mx + c, and pass it to curve_fit as the estimator. The approach also works for multivariate regression. curve_fit returns an array of the function parameters that minimize the sum of squared residuals, together with the estimated covariance matrix of those parameters.

A detailed description is available at https://docs.scipy.org/doc/scipy/reference/generated/scipy.optimize.curve_fit.html
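Here is a minimal sketch of `scipy.optimize.curve_fit` on hypothetical synthetic data. The estimator functions `linear` and `plane` are illustrative names chosen here. For the multivariate case, the predictors are stacked into a `(k, M)` array, which curve_fit passes through unchanged as the first argument of the model function. Parameter standard errors are taken from the square root of the covariance matrix diagonal.

```python
import numpy as np
from scipy.optimize import curve_fit

# Hypothetical estimator for simple linear regression: y = m*x + c.
def linear(x, m, c):
    return m * x + c

rng = np.random.default_rng(2)
x = np.linspace(0, 5, 60)
y = linear(x, 1.7, -0.3) + rng.normal(scale=0.2, size=x.size)

popt, pcov = curve_fit(linear, x, y)
m_hat, c_hat = popt
perr = np.sqrt(np.diag(pcov))  # one-standard-deviation parameter errors

# Hypothetical multivariate estimator: z = a*x1 + b*x2 + c.
def plane(X, a, b, c):
    x1, x2 = X  # X has shape (2, M): one row per predictor
    return a * x1 + b * x2 + c

x1 = rng.uniform(0, 1, size=80)
x2 = rng.uniform(0, 1, size=80)
z = plane((x1, x2), 2.0, -1.0, 0.5) + rng.normal(scale=0.05, size=80)

popt2, pcov2 = curve_fit(plane, np.vstack([x1, x2]), z)
print(popt, perr, popt2)
```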

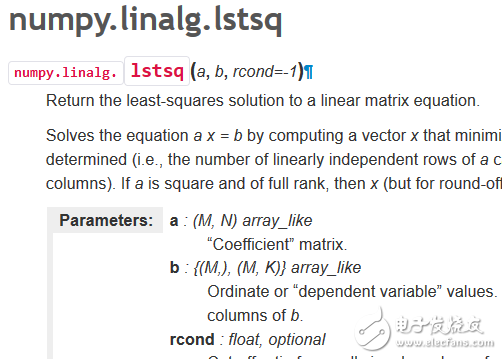

Method 4: numpy.linalg.lstsq
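Here is a minimal sketch of how `numpy.linalg.lstsq` can be used for simple linear regression, assuming hypothetical synthetic data. The function solves the least-squares problem for a design matrix `A`, so the intercept requires an explicit column of ones. Besides the solution, it returns the sum of squared residuals, the rank of `A`, and the singular values of `A`.

```python
import numpy as np

# Hypothetical synthetic data around the line y = -1.2x + 0.7.
rng = np.random.default_rng(3)
x = np.linspace(-3, 3, 40)
y = -1.2 * x + 0.7 + rng.normal(scale=0.3, size=x.size)

# Design matrix [x, 1]: the column of ones carries the intercept.
A = np.column_stack([x, np.ones_like(x)])

# rcond=None uses machine-precision-based cutoff for small singular values.
(m, c), residuals, rank, sing_vals = np.linalg.lstsq(A, y, rcond=None)
print(m, c, residuals, rank)
```

Adding more columns to `A` extends the same call to multiple regression.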
