Embedded vision technology is no longer limited to highly specialized applications; it now has a wide range of uses across many markets

Ten years ago, embedded vision technology was used mainly in relatively rare and highly specialized applications. Today, design engineers are finding uses for it in a growing number of emerging industrial, automotive and consumer electronics applications. Manufacturers have long relied on machine vision systems in industrial settings, but with the advent of advanced robotics and machine learning and the shift to the Industry 4.0 manufacturing model, the boundaries of embedded vision are steadily expanding. The rapid development of electronics in modern cars, especially Advanced Driver Assistance Systems (ADAS) and in-vehicle infotainment systems, has also created opportunities for embedded vision applications. Engineers developing consumer electronics such as drones, gaming systems, and surveillance and security products have seen the benefits of embedded vision technology as well. As demand for intelligent features in network edge applications continues to rise, emerging AI solutions will increasingly rely on embedded vision technology.

We can already see many changes. First, many of the key components and tools needed to rapidly deploy low-cost embedded vision solutions are finally available. Today, design engineers can choose from a variety of lower-cost processors that combine small size, high performance and low power consumption. They also benefit from the wide availability of cameras and sensors, thanks to the fast-growing mobile market. In addition, improvements in software and hardware tools help simplify development and shorten time to market. This article explores how embedded vision technology is used, why it is used, and which applications look most promising in the near future.

Stronger processing power

By definition, an embedded vision system is any device or system that executes image signal processing algorithms or vision system control software. The key parts of an intelligent vision system are a high-performance computing engine for real-time high-definition digital video streams, high-capacity solid-state storage, smart cameras or sensors, and advanced analysis algorithms. Processors in these systems can perform image acquisition, lens correction, image pre-processing and segmentation, target analysis, and various heuristic functions. Embedded vision system design engineers use a variety of processors, including general-purpose CPUs, graphics processing units (GPUs), digital signal processors (DSPs), field programmable gate arrays (FPGAs), and application-specific standard products (ASSPs) designed for vision applications. Each of these processor architectures has distinct advantages and shortcomings. In many cases, design engineers integrate multiple processors into one heterogeneous computing environment. Sometimes the processors are integrated into a single component. In addition, some processors use dedicated hardware to achieve the highest possible vision algorithm performance. Programmable platforms such as FPGAs give design engineers a highly parallel architecture for computationally intensive applications, along with resources for other functions such as I/O expansion.
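To make the processing stages named above more concrete, the following is a minimal sketch of a pre-processing, segmentation and target analysis chain in plain Python. It is an illustration under stated assumptions rather than how any particular product works: the frame is a small grayscale image held as nested lists, and the function names, the 3-tap mean filter and the fixed threshold of 128 are all hypothetical choices made for the example.

```python
from typing import List, Optional, Tuple

Frame = List[List[int]]  # grayscale image, pixel values 0..255 (assumed format)


def preprocess(frame: Frame) -> Frame:
    """Pre-processing: 3-tap horizontal mean filter to suppress pixel noise."""
    out = []
    for row in frame:
        n = len(row)
        smoothed = []
        for x in range(n):
            # Window is clipped at the image borders.
            window = row[max(0, x - 1):min(n, x + 2)]
            smoothed.append(sum(window) // len(window))
        out.append(smoothed)
    return out


def segment(frame: Frame, threshold: int = 128) -> List[List[bool]]:
    """Segmentation: mark pixels at or above the threshold as foreground."""
    return [[pixel >= threshold for pixel in row] for row in frame]


def analyze(mask: List[List[bool]]) -> Optional[Tuple[float, float, int]]:
    """Target analysis: centroid (x, y) and area of the foreground pixels."""
    sum_x = sum_y = area = 0
    for y, row in enumerate(mask):
        for x, is_foreground in enumerate(row):
            if is_foreground:
                sum_x += x
                sum_y += y
                area += 1
    if area == 0:
        return None  # nothing detected in this frame
    return sum_x / area, sum_y / area, area


def process_frame(frame: Frame, threshold: int = 128):
    """Run the three stages in order on one frame."""
    return analyze(segment(preprocess(frame), threshold))
```

In a real system each stage would typically be mapped to the processor best suited to it, for example the per-pixel filtering and thresholding to an FPGA or GPU and the decision logic to a CPU.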

In terms of cameras, embedded vision system design engineers use both analog cameras and digital image sensors. Digital image sensors are typically CCD or CMOS sensor arrays that operate in visible light. Embedded vision systems can also work with other types of sensor data, such as infrared, ultrasonic, radar and lidar.

More and more design engineers are turning to "smart cameras", which place all of the vision system's edge electronics around the camera or sensor. Other systems transfer sensor data to the cloud to reduce the load on the system processor, minimizing system power, footprint and cost in the process. However, this approach becomes problematic when critical decisions based on image sensor data must be made with low latency.

Take advantage of mobility

Although embedded vision solutions have been around for many years, the pace of adoption has been limited by several factors. First and foremost, the key elements of the technology were not available in a cost-effective form. In particular, computing engines capable of processing high-definition digital video streams in real time were not yet widespread. Limitations in high-capacity solid-state storage and advanced analytics algorithms also presented challenges.

Three recent market trends are expected to change the face of embedded vision systems. First, the rapid growth of the mobile market gives embedded vision design engineers a vast array of processor options that deliver relatively high performance at low power. Second, the MIPI Alliance's Mobile Industry Processor Interface (MIPI) gives design engineers the option of using standards-compliant hardware and software components to build innovative, cost-effective embedded vision solutions. Finally, the proliferation of low-cost sensors and cameras for mobile applications has helped embedded vision system design engineers build better solutions at lower cost.

Industrial applications

Machine vision systems in industrial applications have long been one of the most promising areas of embedded vision. Machine vision is also one of the most mature and widely deployed vision technologies, used extensively in manufacturing processes and quality management. Typically, manufacturers in these applications use a compact vision system consisting of one or more smart cameras and processor modules. Analysts at Transparency Market Research predict that the machine vision market will grow from $15.7 billion in 2014 to $28.5 billion in 2021.
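For readers who want to translate the forecast above into a yearly rate, the short sketch below computes the compound annual growth rate (CAGR) implied by the two figures. The function name is hypothetical, and the calculation assumes seven full years of compounding between 2014 and 2021, which is an interpretation of the forecast rather than something the analysts state.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: the constant yearly rate that turns
    start_value into end_value after the given number of years."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures from the forecast, in billions of dollars (2014 to 2021).
implied_rate = cagr(15.7, 28.5, 2021 - 2014)
print(f"Implied annual growth: {implied_rate:.1%}")
```

Under that assumption the forecast corresponds to growth of a little under 9% per year.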

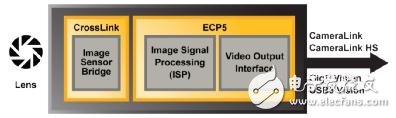

Today, design engineers are finding a seemingly unlimited range of new applications for this technology. For example, the machine vision smart camera in Figure 1 is well suited to monitoring manufacturing equipment on a production line. Design engineers can use FPGAs to implement image sensor bridging, to implement a complete camera image signal processing (ISP) pipeline, and to provide connectivity solutions such as GigE Vision or USB3 Vision.

Figure 1: FPGA in a machine vision smart camera

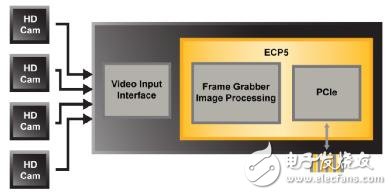

Another example is an FPGA-based video capture card (Figure 2) that aggregates data from multiple cameras, performs image pre-processing, and then sends the data to the host processor over a PCIe interface.

Figure 2: Video capture card
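When sizing a capture card like the one described above, a useful first check is whether the aggregated camera data fits the host link. The sketch below is a back-of-the-envelope estimate only. The camera count, resolution, pixel depth and frame rate are hypothetical values chosen for illustration, the 500 MB/s per lane figure is the theoretical PCIe Gen2 rate after 8b/10b encoding, and the 80% usable fraction is an assumed allowance for protocol overhead.

```python
PCIE_GEN2_BYTES_PER_LANE = 500_000_000  # theoretical, per direction, after 8b/10b


def required_bytes_per_second(cameras: int, width: int, height: int,
                              bytes_per_pixel: int, fps: int) -> int:
    """Raw (uncompressed) data rate produced by all cameras together."""
    return cameras * width * height * bytes_per_pixel * fps


def fits_link(required: int, lanes: int,
              bytes_per_lane: int = PCIE_GEN2_BYTES_PER_LANE,
              usable_fraction: float = 0.8) -> bool:
    """True if the required rate fits within the usable share of the link.

    usable_fraction is an assumed allowance for packet and protocol overhead.
    """
    return required <= lanes * bytes_per_lane * usable_fraction


# Hypothetical setup: four 1080p cameras, 2 bytes per pixel, 60 frames/s.
rate = required_bytes_per_second(cameras=4, width=1920, height=1080,
                                 bytes_per_pixel=2, fps=60)
print(f"Aggregate rate: {rate / 1e6:.0f} MB/s")
print("Fits PCIe Gen2 x4:", fits_link(rate, lanes=4))
print("Fits PCIe Gen2 x1:", fits_link(rate, lanes=1))
```

If the aggregate rate does not fit, pre-processing on the FPGA (cropping, downscaling or compression) is one way to reduce what has to cross the link.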

As more and more manufacturers adopt the Industry 4.0 model, demand for vision systems in the industrial market will also grow. In this new era, manufacturers will integrate advanced robotics, machine learning, 3D depth mapping and the Industrial Internet of Things (IIoT) to improve organization and productivity. The idea that Industry 4.0 represents the fourth stage of the industrial revolution was first proposed in a 2011 article entitled "Industry 4.0 - Smart Manufacturing in the Future". In 2012, the Industry 4.0 Working Group presented a set of implementation recommendations to the German federal government.

The first phase of the industrial revolution, Industry 1.0, introduced water- and steam-powered machinery into manufacturing. The first electrically powered mass production, built on the division of labor, laid the foundation for Industry 2.0. The third era began with the use of computers, which first entered the field of automation and gradually replaced humans with automated machines on the assembly line.

Today, manufacturers expect Industry 4.0 to help them achieve another leap and further increase productivity. Driven by the concept of the "smart factory", Industry 4.0 will introduce cyber-physical systems that monitor the production processes of smart factories and make decentralized decisions. This model will drive the digital transformation of industry by integrating big data and analytics, the convergence of IT and IoT, the latest advances in robotics, and the continued development of digital supply chains. Finally, through the Internet, these cyber-physical systems will interact with one another and with people as part of the Industrial Internet of Things (IIoT).

What would an Industry 4.0 smart factory look like? First, it will provide broad interoperability and a high level of communication among machines, equipment, sensors and personnel. Second, it will have a high degree of information transparency: the system creates a virtual copy of the physical world from sensor data, putting the information in context. Third, decision-making in smart factories will be highly decentralized, and cyber-physical systems will operate as autonomously as possible. Fourth, these factories will provide a high level of technical assistance, with systems helping one another solve problems and make decisions, and helping humans with tasks that are too difficult or dangerous. In almost all cases, manufacturers will rely more on embedded vision systems than ever before.

Of course, manufacturers adopting this new model will also face many challenges. They must develop embedded vision systems with extremely high reliability and very low latency to ensure proper communication between cyber-physical systems. Industry 4.0 will force manufacturers to maintain the integrity of the production process while reducing human supervision. As they deploy these new systems, they will also face a shortage of experienced staff. But the benefits far outweigh the challenges. Industry 4.0 will significantly improve the health and safety of workers in hazardous work environments, and data from all levels of the manufacturing process will help manufacturers control their supply chains more easily and efficiently.

Automotive applications

Given the rapid growth of electronics in vehicles, the automotive market is undoubtedly the most promising area for embedded vision applications. The introduction of advanced driver assistance systems and infotainment capabilities is expected to rapidly drive growth in related markets. Analysts at Research and Markets.com predict that the ADAS market will grow at a compound annual growth rate of 10.44% between 2016 and 2021. The most commonly used embedded vision product in these applications is the camera module. Suppliers either develop their own analysis tools and algorithms or use third-party IP from outside developers. One emerging automotive application is the driver monitoring system, which uses vision to track the driver's head and body movements in order to detect fatigue. Another is a vision system that improves vehicle safety by monitoring for potential driver distractions such as texting or eating.

But vision systems in the car can do much more than monitor what is happening inside it. From 2018 onwards, some national regulations will require new vehicles to be equipped with rearview cameras to help drivers see behind the vehicle. Newer applications such as lane departure warning systems combine video with lane detection algorithms to assess the position of the car. In addition, market demand has driven the development of traffic sign recognition, collision mitigation, blind spot detection, automatic parking and reversing assistance. All of these features help make driving safer.
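The position assessment step in a lane departure warning system can be illustrated with a small sketch. It assumes that an upstream lane detection algorithm (not shown) has already located the left and right lane markings as pixel columns near the bottom of the frame, and that the camera is mounted on the vehicle's centerline so that the image center corresponds to the vehicle's position. The class, the function names and the 0.6 warning threshold are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class LaneObservation:
    left_x: float     # pixel column of the left lane marking (from lane detection)
    right_x: float    # pixel column of the right lane marking
    image_width: int  # frame width in pixels


def lateral_offset(obs: LaneObservation) -> float:
    """Normalized position of the vehicle within the lane.

    0.0 means centered, -1.0 means on the left marking, +1.0 on the right.
    Assumes the image center corresponds to the vehicle centerline.
    """
    half_width = (obs.right_x - obs.left_x) / 2
    if half_width <= 0:
        raise ValueError("right marking must be to the right of the left marking")
    lane_center = (obs.left_x + obs.right_x) / 2
    vehicle_x = obs.image_width / 2
    return (vehicle_x - lane_center) / half_width


def departure_warning(obs: LaneObservation, threshold: float = 0.6) -> bool:
    """Warn when the vehicle has drifted past the threshold toward either marking."""
    return abs(lateral_offset(obs)) > threshold


centered = LaneObservation(left_x=200, right_x=1000, image_width=1280)
drifting = LaneObservation(left_x=500, right_x=1300, image_width=1280)
print(departure_warning(centered), departure_warning(drifting))
```

A production system would also account for camera calibration, road curvature and turn signal state before raising a warning; those are outside the scope of this sketch.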

The development of automotive vision and sensing systems has laid the foundation for true autonomous driving. For example, Cadillac will integrate its embedded vision subsystem into the CT6 sedan in 2018 to deliver Super Cruise, the industry's first hands-free driving technology. The system continuously analyzes both the driver and the road: a precise LIDAR map database provides details of the road, while advanced cameras, sensors and GPS track the dynamics of the road in real time.
